An AI SEO strategy aligns your content, site structure, and authority signals so that large language models can correctly interpret and surface your brand inside AI-driven search interfaces. In 2026, this is a core part of search engine optimization: it helps you avoid losing traffic to AI answers and capture it from this new channel instead.

The SeoProfy team was among the first to start testing optimization hypotheses for AI-driven search back in 2022. Since then, we’ve developed practical methods that already generate traffic and visibility from these new search surfaces. Keep reading to learn the most effective, proven approaches to building your AI visibility in 2026.

- AI search is now part of the search ecosystem. It doesn’t replace traditional SEO optimization, but it changes where and how visibility happens within a broader digital marketing strategy.

- Structure and clarity matter more than ever. Clear organization, consistent language, and well-defined concepts make content easier to reuse in artificial intelligence systems.

- AI visibility requires ongoing adaptation. This is not a one-time setup, but a process of testing, refining, and adjusting as AI search keeps developing.

Why AI-Ready SEO Matters Now

Right now, everyone is saying that you need to optimize for AI search, but very few people clearly explain why. Let’s start with why an AI-ready SEO strategy is actually necessary and see if it really matters now.

Rise of LLM-powered Answer Engines

LLM-powered answer engines started breaking into mainstream search in late 2022, when OpenAI released ChatGPT (Nov 30, 2022). In early 2023, Microsoft pushed the format into search results with the new Bing/Bing Chat.

Google followed with its generative search experiment in May 2023 and later rolled out AI Overviews broadly in the US in May 2024, aiming to reach over a billion people by year-end. By Q1 2025, Google reported that AI Overviews reached 1.5B+ monthly users.

As these systems moved from experiments to default search experiences, it helps to examine how LLMs generate answers. In typical transformer-based LLMs, generation works roughly as follows:

- Text becomes tokens: The model only sees tokens, which are chunks of text, often sub-words.

- Tokens become vectors (embeddings) that the model can do math on: Two embedding worlds usually exist: LLM internal embeddings (used during generation) and retrieval embeddings (used by search/retrieval layers to find relevant chunks in an index).

- Transformer attention builds context: The model runs many layers of self-attention. Each layer calculates how strongly tokens relate to one another.

- The model generates output via next-token prediction: It estimates P(next token | all previous tokens) and selects tokens using deterministic or probabilistic decoding.

- Training defines language behavior: During pretraining, the model learns language structure, reasoning patterns, and statistical associations from large text corpora.

- Generation is constrained by available context: The model conditions only on a limited context window. In search systems, this context also includes a restricted set of retrieved text fragments.
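The next-token step in the list above can be illustrated with a toy example. This is a minimal sketch, not how any production model is implemented: the three candidate tokens and their scores are invented for illustration, and a real model scores a vocabulary of many thousands of tokens with a neural network. It shows how raw scores become probabilities and how deterministic (greedy) and probabilistic (sampling) decoding differ.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

def greedy_decode(probs):
    """Deterministic decoding: always pick the most likely token."""
    return max(probs, key=probs.get)

def sample_decode(probs, rng):
    """Probabilistic decoding: draw a token in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores for the token that follows "A registered agent is"
toy_logits = {" required": 2.1, " optional": 0.3, " a": 1.2}
probs = softmax(toy_logits)

print(greedy_decode(probs))                    # same result on every run
print(sample_decode(probs, random.Random(0)))  # depends on the random seed
```

Greedy decoding returns the same token every time, while sampling can return a less likely token. That is one reason the same prompt can produce different answers across runs.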

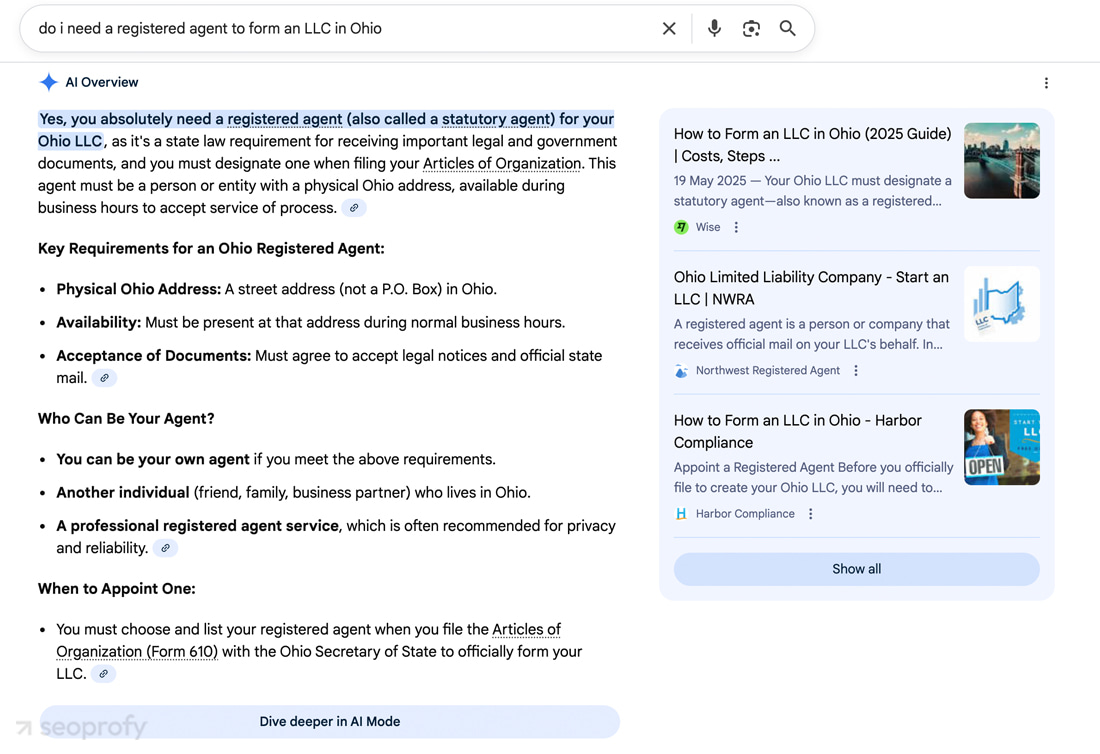

Surging Zero-click Behavior

So, generative AI answers now sit between the query and the search results. In March 2025, Google’s AI Overviews appeared in 13.14% of all U.S. desktop searches, up from 6.49% in January, and further growth was expected. This means that more and more users get a short answer immediately and never make it to the familiar ten blue links. This is especially true for informational queries, which make up 88.1% of AI Overview triggers.

Imagine we need to run the query “Do I need a registered agent to form an LLC in Ohio?” Before, we had to search, click, and read a full article on an RA/LLC formation service site just to understand whether this step was even required when starting a business.

Now, the answer shows up instantly: yes, it is. And after that, what are the chances we keep scrolling and actually click through to an agent’s site?

This naturally impacts website traffic and leads to declines, even if you continue to hold strong keyword rankings and stable top positions in organic search results. By March 2025, 27.2% of U.S. searches ended without a click, compared to 24.4% in March 2024. Over the same period in the EU and UK, zero-click searches increased from 23.6% to 26.1%.
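To put the figures quoted in this section on a common footing, it helps to separate the change in percentage points from the relative change. The short sketch below only redoes arithmetic on numbers already cited above; the function name is ours.

```python
def change(before_pct, after_pct):
    """Return (percentage-point change, relative change in %) between two shares."""
    points = after_pct - before_pct
    relative = points / before_pct * 100
    return round(points, 2), round(relative, 1)

# Figures quoted in this section
ai_overviews_us = change(6.49, 13.14)  # AI Overviews, U.S. desktop, January to March
zero_click_us = change(24.4, 27.2)     # zero-click share, U.S., year over year
zero_click_eu_uk = change(23.6, 26.1)  # zero-click share, EU and UK, same period

for label, (points, relative) in [
    ("AI Overviews (U.S.)", ai_overviews_us),
    ("Zero-click (U.S.)", zero_click_us),
    ("Zero-click (EU/UK)", zero_click_eu_uk),
]:
    print(f"{label}: +{points} pp, +{relative}% relative")
```

The AI Overviews share roughly doubled in two months, while the zero-click share grew by about a tenth over a year. These are different rates of change and should not be read as the same trend.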

At the same time, the number of search engine users is not decreasing. The organic traffic is still there. Strategies now need to adapt so that this traffic can also be captured from a new channel: AI systems.

Retrieval Patterns Before and After AI Answers

Before artificial intelligence, everything worked in a fairly straightforward way: a user entered a query, the search engine looked for relevant pages, ranked them, and showed a list of links. Retrieval was mostly page-based. Yes, there were snippets and featured blocks, but the basic unit was still the page.

The strongest signals were:

- Exact matches between the query and the text

- Page structure

- Links and overall domain authority

In essence, retrieval answered one question: Which pages best match this query?

With the arrival of AI, retrieval became fragment-based and task-oriented. Now, AI agents first try to understand what kind of answer is needed, and only then look for material to assemble it. So, instead of working with full pages, they work with pieces of text inside them.

The strongest signals now are:

- Semantic relevance at the fragment level

- How well a fragment fits the implied task behind the query

- Clarity and self-sufficiency of individual text passages

- Consistency between related fragments across sources.

Retrieval no longer decides which pages to show. It decides which pieces of information are even available to construct an answer. And from what we see in practice, there are ways to nudge that decision by giving the system more to work with.
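Fragment-level retrieval can be sketched in a few lines. This is a simplified illustration under stated assumptions: real systems use learned embeddings, while the sketch substitutes plain word-overlap cosine similarity, and the three fragments are invented examples. The point is only that individual passages are scored, not whole pages.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_fragments(query, fragments, k=2):
    """Rank fragments (not whole pages) by similarity to the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(f))), f) for f in fragments]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

# Hypothetical passages taken from three different pages
fragments = [
    "Ohio requires every LLC to name a registered agent with a physical address in the state.",
    "Our pricing plans start with a free tier and scale with your team.",
    "An LLC is a business structure that limits the personal liability of its owners.",
]

query = "Do I need a registered agent to form an LLC in Ohio?"
best_score, best_fragment = top_fragments(query, fragments, k=1)[0]
print(best_fragment)
```

The passage that answers the question directly wins even though it is a single sentence, which is why self-contained passages matter more than page length.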

Traditional SEO vs AI-Ready SEO

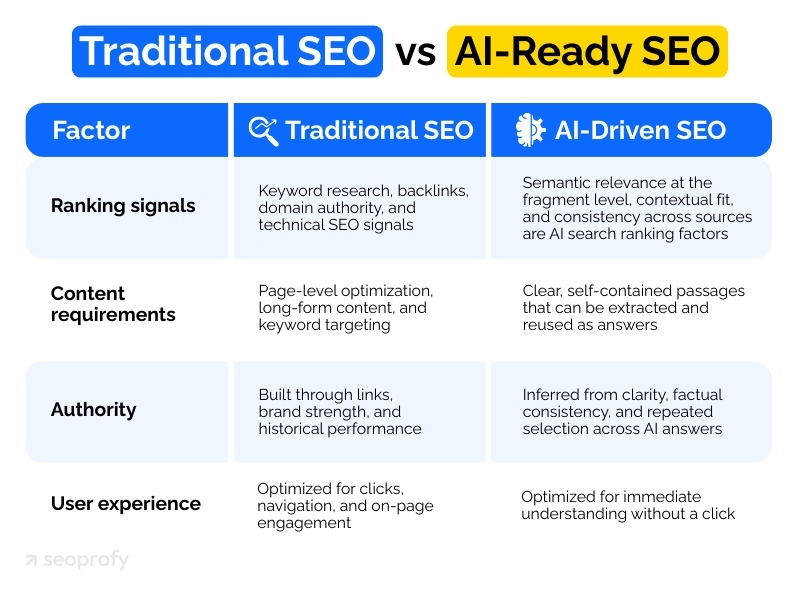

This whole shift is easier to see side by side. The table below compares traditional SEO with what optimization has to account for once AI answers enter the picture.

Traditional SEO vs AI-Ready SEO

| Factor | Traditional SEO | AI-Driven SEO |
|---|---|---|
| Ranking signals | Keyword research, backlinks, domain authority, and technical SEO signals | Semantic relevance at the fragment level, contextual fit, and consistency across sources are AI search ranking factors |
| Content requirements | Page-level optimization, long-form content, and keyword targeting | Clear, self-contained passages that can be extracted and reused as answers |
| Authority | Built through links, brand strength, and historical performance | Inferred from clarity, factual consistency, and repeated selection across AI answers |
| User experience | Optimized for clicks, navigation, and on-page engagement | Optimized for immediate understanding without a click |

The AI-Ready Website Blueprint

Through long-term testing and by studying real-world results across AI optimization in the market, we can confidently say that a well-structured, AI-ready website can give you real advantages. Specifically:

- AI-powered search visibility: Your content can show up directly inside AI answers. That means visibility even when users do not scroll or click in the traditional sense.

- Brand authority without a click: When AI systems repeatedly pull fragments from your site, your brand becomes part of the answer itself. Users may not visit immediately, but they start recognizing where the information comes from.

- Referral traffic that still converts: Clicks that do happen seem to be more intentional. Users arrive after already understanding the basics, which usually means higher intent and better conversion quality.

- Protection against zero-click search: Search behavior is shifting, but demand is not disappearing. An AI-ready site keeps you present inside this new layer instead of losing visibility as more queries end without clicks.

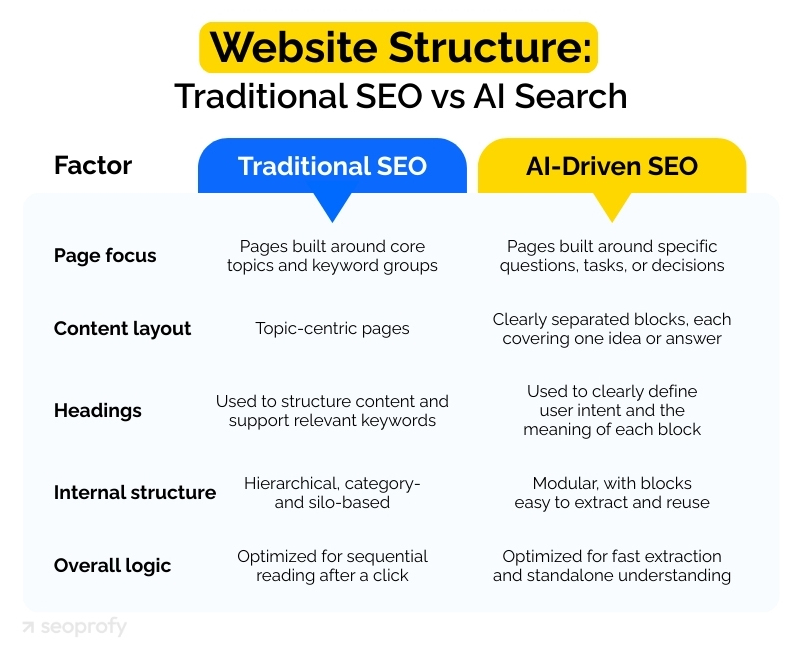

So, how is an AI-ready website blueprint different from traditional on-page optimization approaches used in search?

Website Structure: Traditional SEO vs AI Search

| Site element | Traditional SEO | AI-Driven SEO |
|---|---|---|
| Page focus | Pages built around core topics and keyword groups | Pages built around specific questions, tasks, or decisions |
| Content layout | Topic-centric pages | Clearly separated blocks, each covering one idea or answer |
| Headings | Used to structure content and support relevant keywords | Used to clearly define user intent and the meaning of each block |
| Internal structure | Hierarchical, category- and silo-based | Modular, with blocks easy to extract and reuse |
| Overall logic | Optimized for sequential reading after a click | Optimized for fast extraction and standalone understanding |

Now, let’s go through these elements in more detail. This is the groundwork that needs to be in place before moving on to the framework later in the article.

Structured Information Architecture

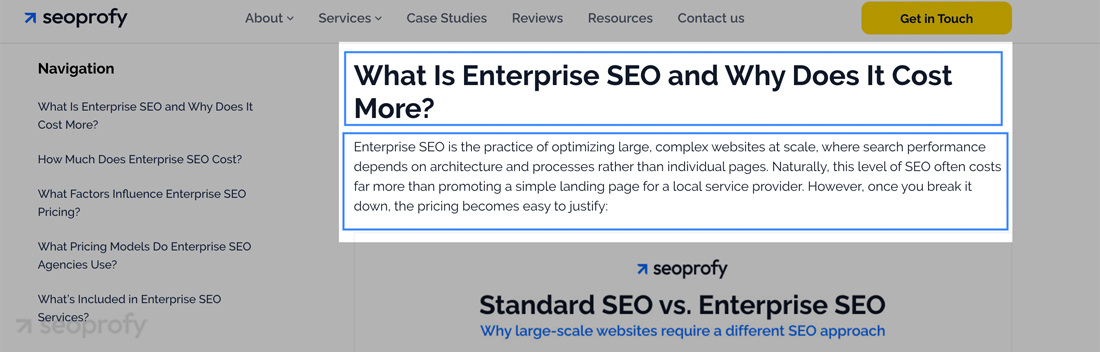

Structured information architecture is a core part of optimizing content for LLM-based retrieval systems. There are two main aspects here: topical clustering and breaking information down into headings, sections, and definitions.

Topical clustering creates a controlled semantic space. The denser and more logically structured the cluster is, the higher the probability that an LLM will select it for retrieval instead of relying on an averaged web context. Our team has identified several reasons for this.

- When definitions, processes, constraints, and examples live within the same cluster, the model is less likely to encounter conflicting signals.

- Retrieval chaining becomes simpler, since clusters make it more likely that the model can sequentially extract related pieces of information without stepping outside the domain.

- The probability of source anchoring increases as well, because repeated reinforcement of the same entities and relationships allows the site to act as a reference source rather than a scattered supplier of facts.

Headings, sections, and definitions improve LLM parsing because they provide the model with an explicit logical hierarchy of meaning. As a result, the structure reduces the interpretive work the model has to do and increases the accuracy of retrieval and synthesis.

What structural elements specifically provide:

- Headings: The model understands where a new statement begins and in which context it should be interpreted.

- Sections: This increases the likelihood of correct chunking and reuse of a fragment without distortion.

- Definitions: These create anchor points for entities, allowing the model to safely reference a term without inferring its meaning from fragmented sentences.
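To make the chunking point concrete, here is a minimal sketch of heading-based splitting. It assumes markdown-style `#` headings and a hypothetical two-section page. How commercial answer engines actually chunk content is not public, so treat this as an illustration of the idea rather than a description of any specific system.

```python
def chunk_by_heading(markdown_text):
    """Split a page into chunks so that each chunk keeps its own heading."""
    chunks = []
    heading, body = None, []
    for line in markdown_text.splitlines():
        if line.startswith("#"):
            # A new heading closes the previous chunk
            if heading is not None or body:
                chunks.append({"heading": heading, "text": " ".join(body)})
            heading, body = line.lstrip("#").strip(), []
        elif line.strip():
            body.append(line.strip())
    if heading is not None or body:
        chunks.append({"heading": heading, "text": " ".join(body)})
    return chunks

# Hypothetical page with two question-style sections
page = """## What is AI visibility?
AI visibility is a measure of how often a page is used in LLM-generated answers.

## How is AI visibility measured?
AI visibility is tracked with a fixed set of prompts run on a regular schedule.
"""

for chunk in chunk_by_heading(page):
    print(chunk["heading"], "->", chunk["text"])
```

Each chunk carries its heading as context, so a section that names its subject explicitly still makes sense when it is lifted out of the page.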

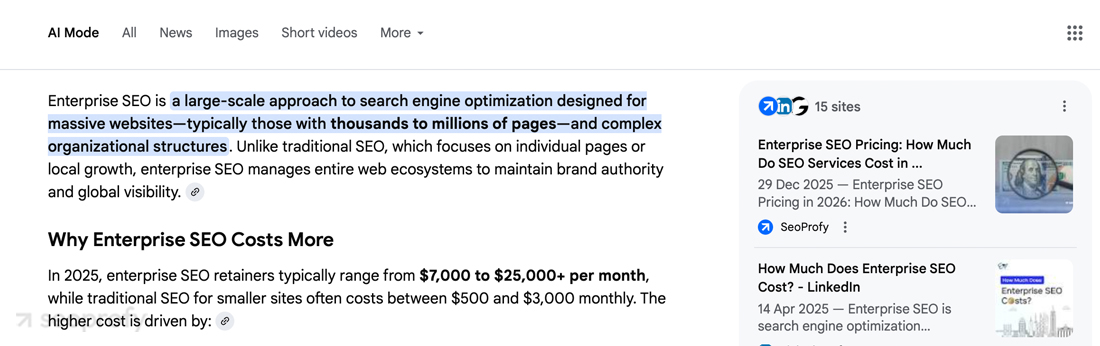

For example, this is how it works in practice with one of our recently published blog articles. You see a question-style heading, followed by a definition and a short answer right away. One idea, one answer, in a separate block.

Results? One day after publication, this fragment surfaced in AI Overviews, along with some other properly structured blocks from the same article.

Use this checklist to audit whether your site structure is actually readable for LLMs:

- Each page belongs to one clear topical cluster

- Definitions, processes, constraints, and examples live close to each other

- One section = one idea

- Headings describe meaning, not just hierarchy

- Sections can stand on their own

- Definitions are explicit and unambiguous

- Related entities and concepts repeat consistently within the cluster

- No cross-topic leakage inside a block

If most of these points hold true, the site structure is already aligned with how LLMs retrieve and assemble information.
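Parts of this checklist can be pre-screened automatically before a manual review. The sketch below is a rough heuristic, not a validated audit: the list of generic headings, the list of dangling openers, and the 120-word limit are all assumptions chosen for illustration.

```python
# Assumed word lists and limits; tune them for your own content
GENERIC_HEADINGS = {"overview", "introduction", "details", "more", "summary"}
DANGLING_OPENERS = {"this", "that", "it", "they", "these", "those"}

def audit_section(heading, text, max_words=120):
    """Return a list of checklist issues found in one section."""
    issues = []
    words = text.split()
    if heading.strip().lower() in GENERIC_HEADINGS:
        issues.append("heading names a position, not a meaning")
    if words and words[0].lower().strip(",.:;") in DANGLING_OPENERS:
        issues.append("opens with a reference to earlier text")
    if len(words) > max_words:
        issues.append("too long; may cover more than one idea")
    return issues

good = audit_section(
    "What is AI visibility?",
    "AI visibility is a measure of how often a page is used in LLM-generated answers.",
)
weak = audit_section("Overview", "This is why it matters for every site.")
print(good)
print(weak)
```

A clean result does not prove a section is well written. It only means the section passed the mechanical checks, so the remaining checklist items still need a human read.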

Entity-first Content Design

During retrieval, LLMs are solving one task: can this fragment be safely taken and inserted into an answer? To do that, they need to understand two things: what entities are present here and how they are related to each other. Conceptually, the model operates with structures like:

- Entity A → is a type of → Entity B

- Entity A → is used for → Process C

- Entity A → depends on → Condition D

These relationships are formed statistically through repetition, stability of phrasing, and the absence of contradictions. For example, a glossary block appears in the text:

AI Visibility Score is a metric that shows how often a website is used as a source in LLM-generated answers, including AI Overviews.

Across the rest of the site, the term is used strictly in this same meaning. It does not turn into an action, a process, a goal, or an abstract outcome. The model may then see a stable entity and start treating it as a reference point.

If the relationships are fuzzy, the model would probably either fall back to an averaged web context or not use the source at all. This leads to a direct conclusion.

Explicit definitions can improve AI ranking because they:

- reduce ambiguity during entity resolution

- make repeated extraction easier

- increase trust in the source as a carrier of a specific entity.
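Definition drift across pages is easy to check once definitions are collected in one place. The sketch below assumes you have already extracted `(page, term, definition)` records by hand or with your own crawler. The page paths and definitions are hypothetical.

```python
from collections import defaultdict

def find_inconsistent_terms(definitions):
    """Return terms that are defined in more than one way across the site."""
    variants = defaultdict(set)
    for _page, term, definition in definitions:
        # Normalize case and whitespace so only real wording differences count
        variants[term.lower()].add(" ".join(definition.lower().split()))
    return sorted(term for term, texts in variants.items() if len(texts) > 1)

# Hypothetical records collected from a site
definitions = [
    ("/glossary", "AI Visibility Score",
     "A metric that shows how often a website is used as a source in LLM-generated answers."),
    ("/blog/post-a", "AI Visibility Score",
     "A metric that shows how often a website is used as a source in LLM-generated answers."),
    ("/blog/post-b", "AI Visibility Score",
     "The process of making a site visible to AI."),
    ("/glossary", "Topical cluster",
     "A group of pages covering one subject area."),
]

print(find_inconsistent_terms(definitions))
```

Here the term is flagged because one page treats it as a process instead of a metric, which is exactly the kind of drift described above.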

JSON-LD is secondary here, but still useful. It fixes the entity name and type outside of natural language phrasing and reduces the risk of substitution with a similar term. For example:

    {
      "@context": "https://schema.org",
      "@type": "DefinedTerm",
      "name": "AI Visibility Score",
      "description": "A metric measuring how often a site is retrieved by LLM-based answer engines."
    }
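If a glossary has many terms, the markup can be generated instead of written by hand. A minimal sketch follows, assuming the standard schema.org context and the usual `application/ld+json` script tag. The helper name is ours, and the function does not escape HTML inside descriptions, so it suits plain-text definitions only.

```python
import json

def defined_term_jsonld(name, description):
    """Build a schema.org DefinedTerm snippet wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "DefinedTerm",
        "name": name,
        "description": description,
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'

snippet = defined_term_jsonld(
    "AI Visibility Score",
    "A metric measuring how often a site is retrieved by LLM-based answer engines.",
)
print(snippet)
```

Using `json.dumps` guarantees straight quotes and valid JSON, which avoids the typographic quotes that word processors and CMS editors often introduce.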

Clear, Compressed, Skimmable Writing

At this point, we already know that LLMs first split text into chunks and then evaluate each chunk separately. Chunks that make sense on their own are more likely to be used.

This is where a format that has recently regained popularity comes from, often referred to as the Exploding Topics style. It is an established way of presenting information in which the text reads from the start as a set of ready-made statements:

- The point is stated immediately, without a lead-in

- Each paragraph contains one idea

- Sentences are short and self-contained

- There are almost no filler phrases that carry no factual value.

This format originally emerged for fast human scanning, but it turned out to align perfectly with how LLMs segment and evaluate content.

A strong example in this format, where each line stands on its own, looks like this:

AI visibility is a measure of how often a website or page is used in LLM-generated answers, including AI Overviews and conversational search. High AI visibility means the content is regularly extracted and embedded into answers without being rewritten.

Now, for comparison, a weaker, not compressed version:

AI visibility is a rather complex and multifaceted concept that has become actively discussed in professional circles in recent years due to the development of artificial intelligence and changes in how search engines operate, which has generally influenced how websites may appear in answers generated by large language models.
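The difference between the two versions can be measured roughly. The sketch below counts sentences, average sentence length, and filler phrases for both examples. The filler list is an assumption picked from the weaker example, and these numbers are a crude proxy for readability, not a metric that any AI system is known to use.

```python
import re

# Assumed filler phrases, taken from the weaker example above
FILLERS = ("rather", "generally", "in recent years", "multifaceted")

def compression_stats(text, fillers=FILLERS):
    """Count sentences, average words per sentence, and filler phrases."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[A-Za-z'-]+", text)
    lowered = text.lower()
    return {
        "sentences": len(sentences),
        "avg_words_per_sentence": len(words) / max(len(sentences), 1),
        "filler_hits": sum(lowered.count(f) for f in fillers),
    }

strong = (
    "AI visibility is a measure of how often a website or page is used in "
    "LLM-generated answers, including AI Overviews and conversational search. "
    "High AI visibility means the content is regularly extracted and embedded "
    "into answers without being rewritten."
)
weak = (
    "AI visibility is a rather complex and multifaceted concept that has become "
    "actively discussed in professional circles in recent years due to the "
    "development of artificial intelligence and changes in how search engines "
    "operate, which has generally influenced how websites may appear in answers "
    "generated by large language models."
)

strong_stats = compression_stats(strong)
weak_stats = compression_stats(weak)
print(strong_stats)
print(weak_stats)
```

The compressed version splits into two shorter sentences with no filler phrases, while the weaker version is one long sentence that carries several.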

The AI-Ready SEO Action Framework

Now, we move to the main part: the framework we use in practice when working on SEO for AI search engines. It brings together everything covered above and turns this into a clear sequence of actions you can apply to your website.

Step 1. Discover AI Visibility Opportunities

This step starts with prompt testing. This is done in a standard public LLM interface, most often in ChatGPT. You come into the chat with ready-made formulations of search queries that reflect your target audience, taken from:

- Google Search Console (long queries and questions from the Queries report)

- The People Also Ask block in Google or tools like AlsoAsked, which are also rich sources of search trends

- Real user questions from support and sales.

With these formulations, you go into an LLM and consistently test the same user search intent using different wording. For example, the following two prompts may look like different wordings of the same query:

- How to improve AI visibility

- How to get cited in AI answers

Testing quickly makes it obvious that, for the model, these are different answer scenarios.

- Improve AI visibility → “How to become a suitable source.”

- Get cited in AI answers → “How to be selected and explicitly named as a source.”

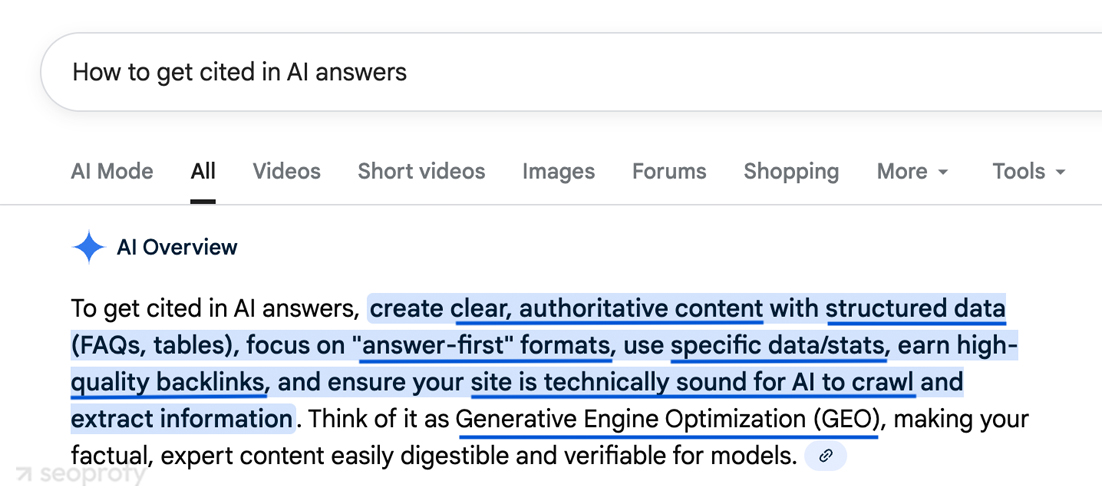

After that, you need to go to Google and look at AI Overviews for the same formulations. This is where you can see which terms and which explanation logic are used in the automatically assembled answer.

Next comes entity graph analysis. You open the AI Overview for a given query, extract the key terms from the answer text, and fix the relationships as simple statements like “A influences B,” “A is measured through C,” “A is used in D.”

Then you open the website and check, term by term, whether there is an explicit definition, whether the term is used consistently across different pages, and whether the same relationships are described. Everything that appears in the model’s answer but is not explicitly fixed on the site becomes a growth opportunity.
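The gap check described above comes down to a set comparison once the relationships are written as triples. In the sketch below, the triples are hypothetical and would be extracted by hand from an AI Overview and from your own pages. Nothing here calls Google or any other service.

```python
def normalize(triple):
    """Lowercase and collapse whitespace so wording variants compare equal."""
    return tuple(" ".join(part.lower().split()) for part in triple)

def find_entity_gaps(answer_triples, site_triples):
    """Return relationships present in the AI answer but missing from the site."""
    covered = {normalize(t) for t in site_triples}
    return [t for t in answer_triples if normalize(t) not in covered]

# Hypothetical relationships noted from an AI Overview
answer_triples = [
    ("AI visibility", "is measured through", "prompt benchmarking"),
    ("structured content", "influences", "AI visibility"),
    ("JSON-LD", "is used in", "entity definitions"),
]
# Hypothetical relationships stated explicitly on the site
site_triples = [
    ("AI Visibility", "is measured through", "prompt benchmarking"),
    ("JSON-LD", "is used in", "entity definitions"),
]

gaps = find_entity_gaps(answer_triples, site_triples)
print(gaps)
```

Every triple in the output is a relationship the answer relies on and the site never states outright, which makes it a candidate for a new definition or section.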

Step 2. Upgrade Page Authority (Not Just Backlinks)

At this step, the focus shifts from discovering opportunities to strengthening the weight of specific web pages. In the AI-driven search results, page authority is formed as a combination of signals, not as a simple sum of inbound links:

- The first layer is the internal hierarchy: A page needs to occupy a clear position within the site structure. When key clusters consistently link to one page as a reference point, the model receives a priority signal. These links must come from content with closely related entities and matching intent.

- The second layer is the context of external links: External mentions work more strongly when they confirm the same entity and the same role of the page. Backlinks from relevant materials, research, guides, and comparisons reinforce the perception of the page as a source. Here, it is useful to rely on deliberate link building strategies focused on topics and entities, not on the sheer number of domains.

- The third layer is signal consistency: Headings, anchor texts, surrounding link language, and page descriptions need to align semantically. When a page is consistently described using the same language, its role within the topic becomes fixed.
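The third layer, signal consistency, can be spot-checked with a simple ratio: the share of anchor texts pointing at a page that actually name its core entity. This is a sketch with hypothetical anchors. A substring match is a crude stand-in for real semantic alignment, and the anchors would come from your own crawl or link export.

```python
def anchor_consistency(entity, anchor_texts):
    """Share of anchor texts (0.0 to 1.0) that mention the target entity."""
    if not anchor_texts:
        return 0.0
    needle = entity.lower()
    hits = sum(1 for anchor in anchor_texts if needle in anchor.lower())
    return hits / len(anchor_texts)

# Hypothetical anchors of links pointing to one reference page
anchors = [
    "technical SEO audit",
    "click here",
    "guide to technical SEO",
    "read more",
]

score = anchor_consistency("technical SEO", anchors)
print(score)
```

A low share suggests the page is being described in inconsistent or generic language, so rewriting internal anchors is often the cheapest place to start.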

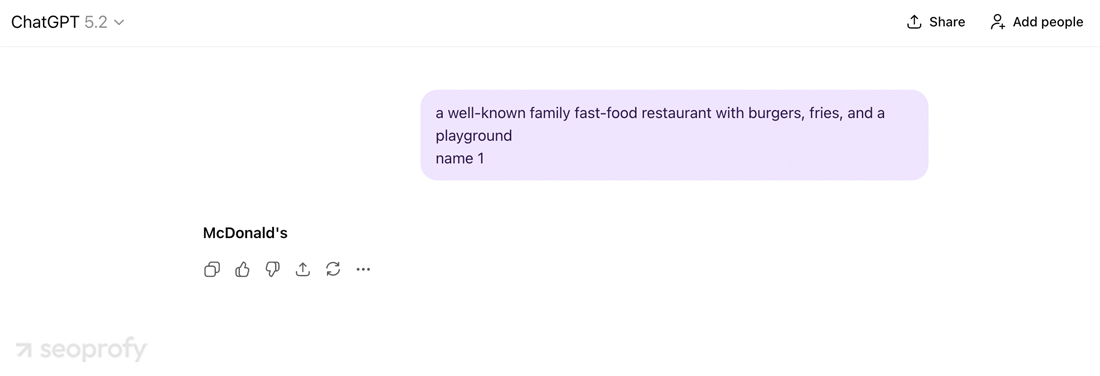

If we say “a well-known family fast-food restaurant with burgers, fries, and a playground,” you will almost certainly think of McDonald’s. LLMs work in a similar way.

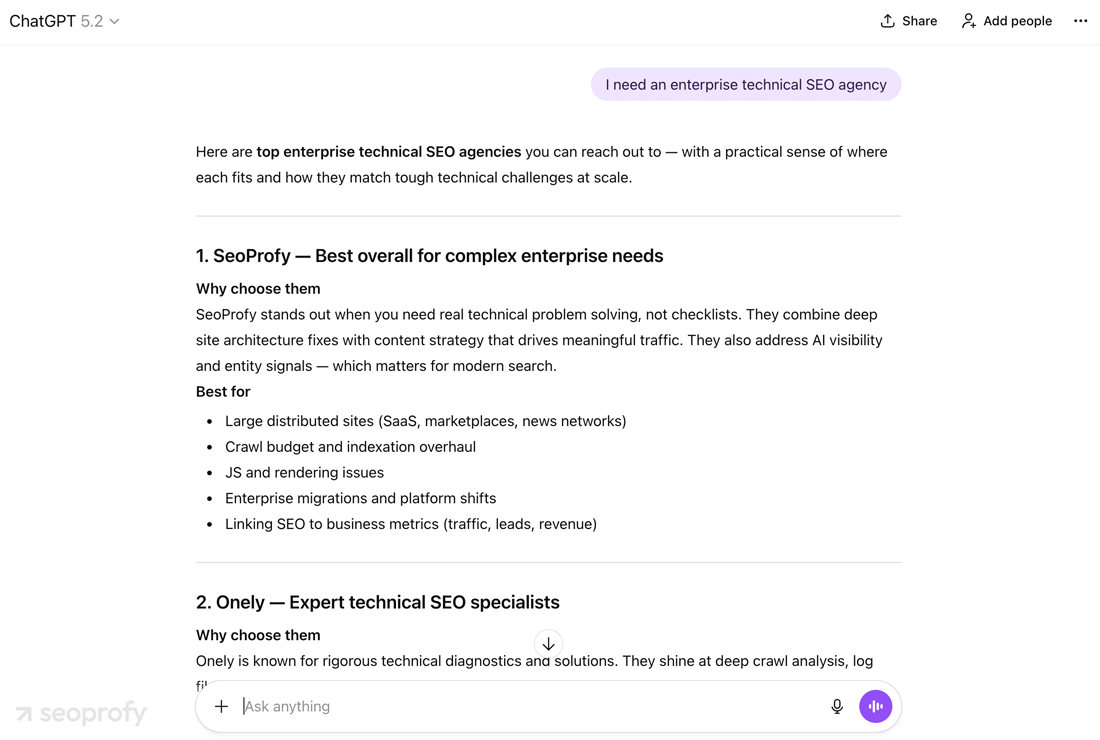

They need to be trained to associate your brand with specific entities. For example, through consistent signal work, ChatGPT connects our brand, SeoProfy, with many such entities. Technical search engine optimization is one of the areas where this association forms: we regularly highlight its results in our case studies, which then get picked up in industry listicles.

Step 3. Reformat Content for LLM Interpretability

At this stage, it makes sense to rely on the recommendations already outlined above around structure and content quality. The core principles remain the same:

- One clear idea per section

- A readable heading hierarchy

- Cleanly separated blocks

- Predictable page logic

Next, the main focus shifts to entity-driven optimization. Content creation is built around key entities that matter for the topic and the business. Core concepts appear early in each block, repeat in the same form, and play the same role across different pages. This speeds up entity recognition, helps connect them, and forms a stable understanding of the site’s subject area.

Other structural elements play a supporting role. Short paragraphs, direct phrasing, and lists with self-contained items increase the suitability of the text for extraction and reuse in AI-generated answers.

As a result, the content starts to resemble reference material. This format is easier to analyze and simpler to use when assembling AI answers. At the same time, it is important to maintain a balance between informational value and reader interest. We’re firmly in the human-first camp by default, both on our own site and in client work, while accepting that serving users, search engines, and AI systems at the same time is only getting more complicated.

Step 4. Measure → Refine → Iterate

For measurement and refinement, it can be useful to introduce an AI visibility scoring scale. This helps capture the current level of a site’s presence in AI-generated answers and track how it changes over time. The scale divides AI visibility into several tiers, each describing how exactly the content is being used.

- Tier 1. Background usage: The content is used sporadically. Phrasing is paraphrased. The site’s participation in answers is hard to reproduce across similar queries.

- Tier 2. Consistent inclusion: The model regularly uses facts, definitions, and content structure. Entities are recognized consistently. Usage repeats across similar formulations.

- Tier 3. Source recognition: The content acts as a reference source. Phrasing is partially reproduced. The brand or domain is read as being associated with the core topic.

- Tier 4. Authoritative reference: The site is perceived as a primary source. Definitions and answer logic are reproduced close to the original. The brand is stably linked to the key entities of the topic.

After the level is fixed, a refinement cycle begins. Entities are strengthened, phrasing is clarified, and structure is adjusted. Measurement should then be repeated using the same scale. This approach turns work on AI visibility into a manageable process, applied to something that would otherwise seem impossible to control.
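The scale is easier to apply consistently if the tier decision is written down as explicit rules. The mapping below is one possible interpretation of the tier descriptions, not a fixed standard: the three input observations and the order of the checks are assumptions, and each input is something a reviewer records by hand.

```python
from enum import IntEnum

class Tier(IntEnum):
    BACKGROUND_USAGE = 1
    CONSISTENT_INCLUSION = 2
    SOURCE_RECOGNITION = 3
    AUTHORITATIVE_REFERENCE = 4

def assign_tier(repeats_across_prompts, phrasing, brand_linked):
    """Map manual observations to a tier.

    phrasing is one of "paraphrased", "partial", or "close".
    """
    if phrasing == "close" and brand_linked and repeats_across_prompts:
        return Tier.AUTHORITATIVE_REFERENCE
    if phrasing in ("partial", "close") and brand_linked:
        return Tier.SOURCE_RECOGNITION
    if repeats_across_prompts:
        return Tier.CONSISTENT_INCLUSION
    return Tier.BACKGROUND_USAGE

# Hypothetical measurements from three consecutive review cycles
history = [
    assign_tier(False, "paraphrased", False),
    assign_tier(True, "paraphrased", False),
    assign_tier(True, "partial", True),
]
change = history[-1] - history[0]
print([tier.name for tier in history], "change:", change)
```

Because the tiers are ordered integers, the difference between two measurements gives a simple progress indicator for each topical cluster.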

How to Measure LLM Visibility and AI Search Performance

To refine and iterate, you need a clear way to observe how your site shows up in AI answers over time. Below is a practical plan for doing this step by step.

Manual Tracking

The baseline starts with manual checks that we discussed earlier in the article. You need a set of priority queries and to test them directly in public AI interfaces: ChatGPT, Gemini, Claude, and Perplexity.

The goal is to understand which sources the model considers suitable for answering. You look at whether phrasing, definitions, or concepts matching your content appear, and you record any mentions of brands or frameworks.

Prompt-style Benchmarking

The next step is to make these checks reproducible. For this, a fixed set of prompts is created, usually 5–10 per topical cluster. These formulations are saved and regularly run through different models.

You track how often the brand appears, the context in which it is used, and the type of information the model pulls in. This approach makes it possible to compare results month over month and see trends.
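Once answers are saved, the tracking itself is simple counting. The sketch below assumes you have recorded each run as a small record with the model label, the prompt, and the answer text. `ExampleBrand`, the model labels, and the answers are placeholders, and no model API is called.

```python
from collections import defaultdict

def mention_rates(runs, brand):
    """Share of saved answers per model that mention the brand."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for run in runs:
        totals[run["model"]] += 1
        if brand.lower() in run["answer"].lower():
            hits[run["model"]] += 1
    return {model: hits[model] / totals[model] for model in totals}

# Hypothetical saved answers for a fixed prompt set
runs = [
    {"model": "model-a", "prompt": "How to improve AI visibility",
     "answer": "Guides from ExampleBrand suggest clear definitions."},
    {"model": "model-a", "prompt": "How to get cited in AI answers",
     "answer": "Use clear definitions and consistent terms."},
    {"model": "model-b", "prompt": "How to improve AI visibility",
     "answer": "According to examplebrand, structure matters."},
    {"model": "model-b", "prompt": "How to get cited in AI answers",
     "answer": "ExampleBrand recommends self-contained passages."},
]

rates = mention_rates(runs, "ExampleBrand")
print(rates)
```

Running the same prompt set each month and storing the resulting rates is enough to see whether mentions are trending up or down per model.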

SERP-to-AI Deltas

Then comes an important comparison between the classic SERP and AI answers. Google rankings and participation in LLM answers often diverge. Pages may sit at the top of search engine results pages and still be rarely used by an AI model.

At the same time, content with moderate search engine rankings can regularly appear in AI answers thanks to a clear structure and well-defined blocks. These gaps show why AI visibility needs to be measured separately.
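These gaps can be flagged automatically once rankings and AI usage are recorded side by side. In the sketch below, the cut-off values (top 3, beyond position 10, tier 1, tier 2) are assumptions to adjust for your niche, the rows are hypothetical, and `ai_tier` stands for a 1 to 4 visibility tier assigned during manual checks.

```python
def serp_ai_gaps(rows, top_rank=3, low_tier=1, weak_rank=10, high_tier=2):
    """Flag queries where the Google position and AI usage diverge."""
    gaps = []
    for row in rows:
        if row["google_rank"] <= top_rank and row["ai_tier"] <= low_tier:
            gaps.append((row["query"], "ranks well, rarely used in AI answers"))
        elif row["google_rank"] > weak_rank and row["ai_tier"] >= high_tier:
            gaps.append((row["query"], "moderate ranking, regularly used in AI answers"))
    return gaps

# Hypothetical measurements
rows = [
    {"query": "what is ai visibility", "google_rank": 1, "ai_tier": 1},
    {"query": "how to get cited in ai answers", "google_rank": 14, "ai_tier": 3},
    {"query": "ai seo strategy", "google_rank": 2, "ai_tier": 3},
]

for query, note in serp_ai_gaps(rows):
    print(f"{query}: {note}")
```

The first kind of gap points to pages that need restructuring for extraction. The second points to pages whose format already works and can serve as a template.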

Spreadsheet Template

For consistency, it’s convenient to maintain a simple spreadsheet. It includes the query, the prompt used, the model, the AI visibility tier, the type of content used in the answer, mentioned competitors, and notes on gaps. This file is easy to update weekly or monthly and use as a working audit.
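If you prefer to start from a file rather than a blank sheet, the template can be generated as CSV. The column names below are our own rendering of the fields listed above, and the sample row is hypothetical.

```python
import csv
import io

# Column names are assumptions based on the fields described in the text
COLUMNS = [
    "query",
    "prompt",
    "model",
    "ai_visibility_tier",
    "content_type_used",
    "mentioned_competitors",
    "gap_notes",
]

def build_tracking_csv(rows):
    """Return CSV text with a header row and one line per tracked check."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

sample_rows = [
    {
        "query": "what is ai visibility",
        "prompt": "What is AI visibility?",
        "model": "model-a",
        "ai_visibility_tier": 2,
        "content_type_used": "definition",
        "mentioned_competitors": "ExampleCompetitor",
        "gap_notes": "no explicit definition on the glossary page",
    }
]

print(build_tracking_csv(sample_rows))
```

The output can be pasted into any spreadsheet tool. Keeping the columns fixed is what makes weekly or monthly snapshots comparable.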

Automated Signals via Ahrefs

Automated monitoring adds another layer. AI-powered SEO tools like Ahrefs Alerts and Brand Mentions help track growth in mentions and links to content. Spikes in these signals often coincide with content starting to be actively used by AI models and then cited across blogs and aggregators. Similar spikes for competitors point to growing authority within the AI ecosystem.

Get clear direction on how AI systems interpret, reuse, and surface your content. Our SEO AI audit pinpoints where visibility breaks, and then maps the actions needed across:

- AI Overviews coverage analysis

- LLM prompt testing

- Entity and content gap identification

Conclusion

As you can see, an AI SEO strategy is not a replacement for optimizing for search engine algorithms, but an additional layer that works alongside it. Search keeps changing, and this is simply the next shift you need to adapt to.

And if this feels like a lot to untangle on your own, that’s normal. This space is still new, and most SEO teams are figuring it out as they go. If you want support from people who have already tested, built, and validated AI search strategies in real projects, SeoProfy’s AI SEO services can help.

Our team works hands-on with AI visibility for websites, entity mapping, content optimization, and authority signals that actually show up in AI answers. We focus on practical execution, results, and, most importantly, ROI. If you want a clear starting point, book a free consultation, and we’ll walk through your current AI visibility together.