Most of our articles focus on how to get noticed by Google and reach the top of search results. Shopping cart pages are a special case, though: they were never meant to be the center of attention. The main point of your cart is to make it easy for customers to finish their purchases.

The problem is that Google may still discover your cart pages and show them in its search results. That wastes Google’s crawling time on pages that don’t need to be prioritized. In this article, we’re flipping the usual SEO advice: we walk through the shopping cart SEO best practices that keep your cart pages out of search results while making sure they work for actual shoppers.

- Cart pages should stay off search engines because they’re just for shoppers, not for search rankings.

- A truly SEO-friendly shopping cart needs simple URLs, quick loading times, solid security (make sure it’s HTTPS), and a design that works well on mobile.

- When search engines spend their time crawling cart pages, they’re wasting your site’s crawl budget.

- Without proper search engine optimization, dynamic cart URLs can create multiple versions of the same page, which can cause problems with duplicate content.

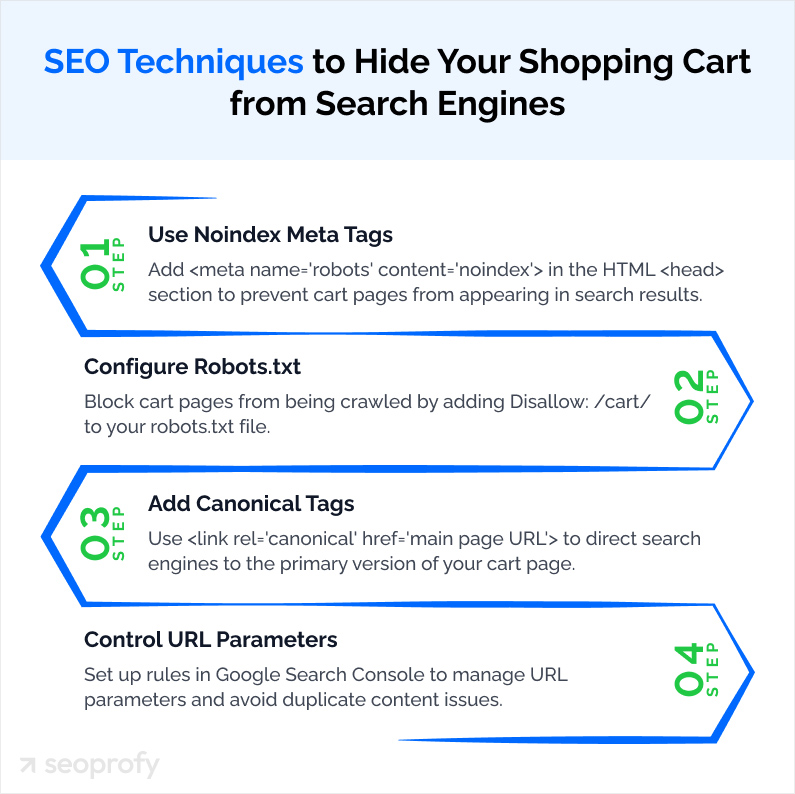

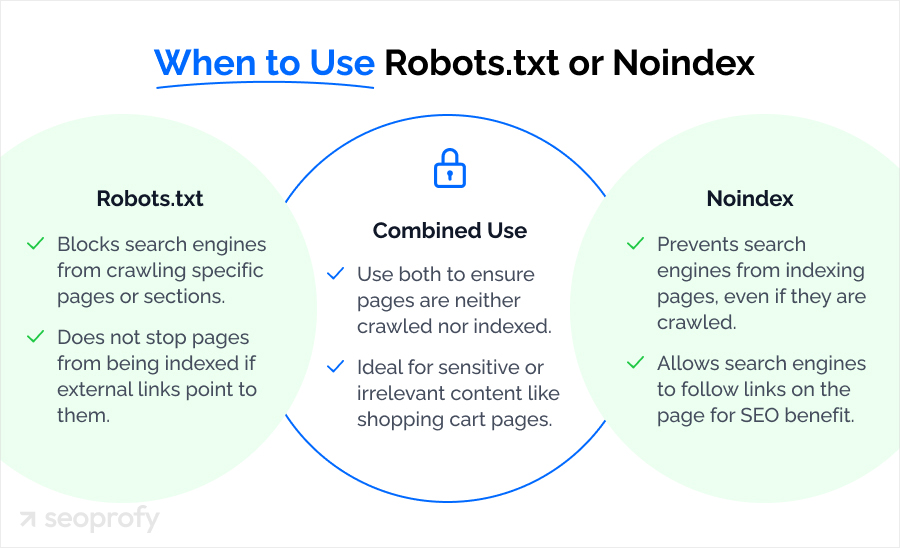

- There are four main methods to control how search engines crawl and index cart pages: URL parameter handling, canonical tags, robots.txt, and noindex meta tags.

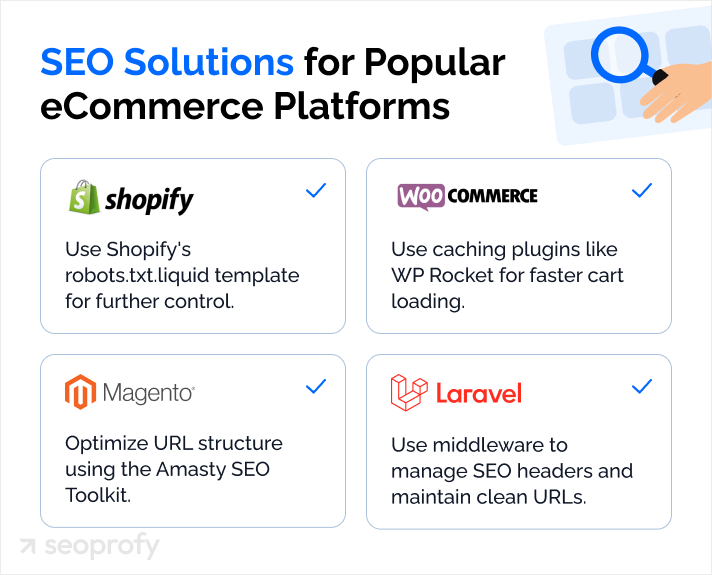

- Each eCommerce platform or framework (Shopify, WooCommerce, Magento, Laravel) has its own way to implement cart SEO best practices.

What Makes a Shopping Cart SEO-Friendly?

At first glance, asking about SEO-friendly shopping carts might seem odd. After all, if we don’t want search engines to find our cart pages, why bother making them SEO-friendly?

Here’s the deal: while a shopping cart page isn’t meant to be found through Google Search, handling it correctly helps Google crawl and index the rest of your pages more efficiently.

Besides, the goal of search engine optimization isn’t just to attract more visitors to your website but to boost conversions. That means working not only for Google but also for the shoppers who come to your site to complete their purchases. User-friendly equals SEO-friendly.

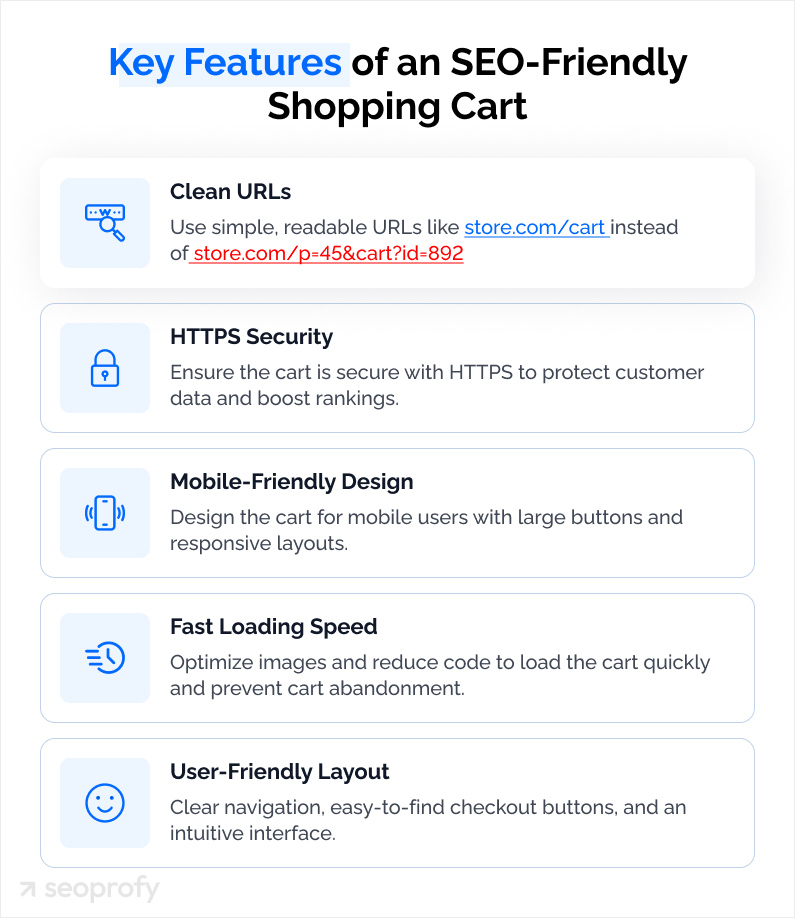

A good shopping cart needs a few key things to be SEO-friendly:

- Clean URLs: Instead of a confusing jumble like “store.com/p=45&cart?id=892”, go for simple addresses like “store.com/cart.” This makes it easier for both search engines and shoppers to know where they are in your online store.

- Page loading speed: Nobody likes waiting in line, and your customers won’t wait for a slow cart to load. Every extra second can mean lost sales. Keep your cart quick and light by trimming unnecessary code and making images load faster.

- Security: That little padlock (HTTPS) shows visitors that your cart is secure. Google also uses HTTPS as a ranking signal, so it’s a win for everyone.

- Mobile design: Mobile-friendly design is search-engine-friendly design, because Google mainly uses the mobile version of a page for indexing. If your cart looks broken on mobile or the buttons are too tiny to tap, you’re also missing out on sales.

Why Shopping Cart Pages Should Not Be Indexed

Online shopping cart pages are private areas that hold the items someone plans to buy. They’re only relevant to one customer at a time. Their contents, and often their URLs, change constantly, so any cart URL Google discovers can look like a new or updated page worth revisiting.

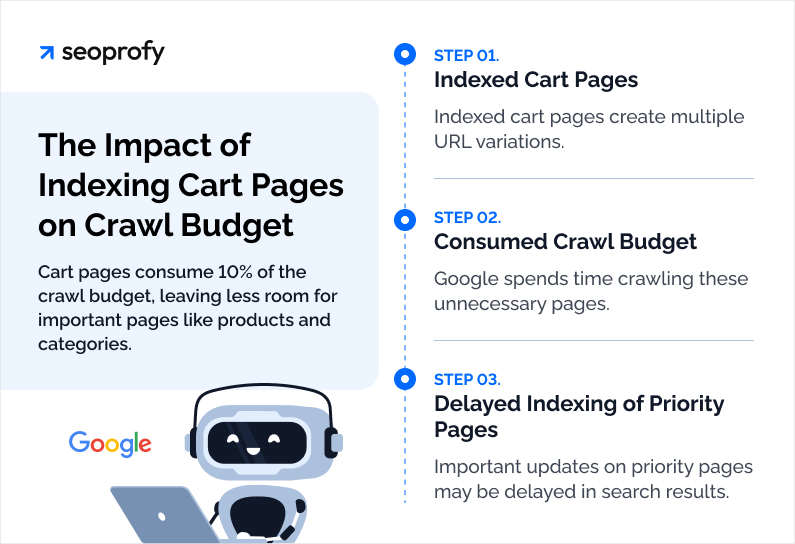

However, search engines can only devote a limited amount of time and resources to crawling your website. This is called the crawl budget: roughly, the number of URLs Google can and wants to crawl on your site within a given timeframe. Your crawl budget largely depends on your site’s popularity, update frequency, server response speed, and overall site health.

So, when search engines waste time looking at cart pages, they might overlook the real gems: your product landing pages and useful shopping guides.

Impact on SEO and Crawl Budget

Every time a customer interacts with your cart, new URLs can be generated. For example:

- Adding a product: shop.com/cart?add=123

- Changing quantity: shop.com/cart?add=123&qty=2

- Applying coupon: shop.com/cart?add=123&qty=2&coupon=SAVE10

Each of these links looks like a new page to search engines. If you multiply this by thousands of customers, you can see how fast cart pages can eat up your crawl budget.
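You can see how much crawling your cart actually absorbs by counting search engine requests in your server access logs. The sketch below is one minimal way to do that. It makes three assumptions: the logs use the common Apache/Nginx “combined” format, any path starting with /cart or /checkout counts as a cart URL, and the sample log lines are made up for illustration. Matching on the user-agent string alone is also a simplification, since a real audit should verify Googlebot, for example through a reverse DNS lookup.

```python
import re

# Captures the request path and the user agent from a "combined" access-log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical cart-related path prefixes. Note that "/cart" also matches paths
# like "/cartoons", so tighten these to your store's real URLs.
CART_PREFIXES = ("/cart", "/checkout")


def cart_crawl_share(lines, bot_token="Googlebot"):
    """Return the fraction of bot requests that hit cart or checkout URLs."""
    bot_hits = 0
    cart_hits = 0
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or bot_token not in match.group("agent"):
            continue  # skip malformed lines and non-bot traffic
        bot_hits += 1
        if match.group("path").startswith(CART_PREFIXES):
            cart_hits += 1
    return cart_hits / bot_hits if bot_hits else 0.0


# Hypothetical sample lines; the last one is a regular shopper, not a crawler.
sample_log = [
    '66.249.66.1 - - [10/Mar/2025:10:00:00 +0000] "GET /cart?add=123 HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '66.249.66.1 - - [10/Mar/2025:10:00:05 +0000] "GET /cart?add=123&qty=2 HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '66.249.66.1 - - [10/Mar/2025:10:00:09 +0000] "GET /products/shoe HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '203.0.113.7 - - [10/Mar/2025:10:01:00 +0000] "GET /cart HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
print(f"{cart_crawl_share(sample_log):.0%} of Googlebot requests hit cart URLs")
```

In this toy sample, two of the three crawler requests land on cart URLs. That is the kind of ratio worth watching in your own logs.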

While search engines spend their time checking these ever-shifting URLs, important updates may sit in the queue. Let’s say you:

- Add new products

- Update prices

- Publish a new buying guide

- Launch a sale

These changes might take longer to be displayed in search results because search engines are busy sorting through temporary cart pages.

The solution is pretty simple: tell search engines to ignore these pages (noindex). You can do this with a few basic technical settings on your website, so search engines can focus on the pages shoppers are actually looking for.

Risks of Duplicate Content

Furthermore, leaving cart pages visible doesn’t just waste your crawl budget; it also creates duplicate content issues. Google doesn’t normally penalize duplicate content, but it has to pick one version to show and may spend crawling on all the others. As we mentioned, these pages are dynamic, so if search engines can access them, they end up evaluating many versions of essentially the same page.

While humans see different products in each cart, Google sees the same thing over and over. Cart pages share the same layout, the same buttons for multiple payment gateways, and the same overall design, with only the products changing. The problem gets worse with other user-specific parameters, such as language and currency switches.

Canonical tags help sort this out. They tell search engines that “store.com/cart” is the main version of the page, so all those parameterized variations point back to it.

If you’d like to learn about other important aspects of search engine optimization for online stores, our SEO guide for e-commerce covers everything from technical setup to content optimization.

How to Control Search Engine Crawling and Indexing

So, search engines need clear instructions about which parts of your store to explore and which to skip. We’ve touched on some solutions already; now let’s look at each method in more detail so your cart pages stay under wraps.

Here are the main methods to control search engine behavior:

- URL parameter handling: This keeps the different parameterized versions of your cart URLs from being treated as separate pages.

- Canonical tags: When you can’t avoid having similar cart pages, these tags direct search engines to a single main version. They help you avoid issues related to duplicate content.

- Robots.txt: This file keeps search engines from crawling whole sections of your site. It’s more efficient than handling individual URLs, because compliant crawlers skip your cart area before they request a single page in it.

- Noindex meta tags: Your strongest tool. These tags send a clear signal to search engines: “Don’t show this page in search results, ever.”

Before you start implementing these tactics, figure out which parts of your store need to stay under wraps. Success lies in the specifics: decide which pages should appear in search results and which areas should be off-limits.

Want to see how your store’s SEO is doing right now? Let’s discuss how we can help:

- Address any duplicate content issues

- Speed up your store’s loading time

- Drive more organic traffic to your store

URL Parameter Controls

URL parameters are bits of information appended to a web address after a question mark. Each parameter can describe a different aspect of your shopping cart page, such as adding a product to the cart (e.g., ?add-to-cart=123). This is exactly what leads to duplicate content.

Google Search Console used to offer a URL Parameters tool for this, where you could tell Google that certain parameters don’t change page content. Google retired that tool in 2022, saying its crawler now works out on its own which parameters matter. Today, parameter handling comes down to the other methods in this section:

- Link to clean, parameter-free cart URLs wherever possible

- Point parameterized variations to the main URL with canonical tags

- Block crawling of parameters that only change the cart state with robots.txt rules

This is how you show Google that these variations don’t need their own place in the index.
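Another way to keep parameter variations under control is to strip cart-only parameters from the URLs you link to and declare as canonical. The sketch below removes a hypothetical list of cart parameters (add, add-to-cart, qty, coupon). It keeps parameters that do change the content, such as a page number. The parameter names are assumptions, so substitute the ones your platform actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only describe the shopper's cart state.
CART_ONLY_PARAMS = {"add", "add-to-cart", "qty", "coupon"}


def strip_cart_params(url: str) -> str:
    """Drop cart-state parameters (and the fragment) but keep content parameters."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in CART_ONLY_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(strip_cart_params("https://shop.com/cart?add=123&qty=2&coupon=SAVE10"))
print(strip_cart_params("https://shop.com/shoes?add-to-cart=123&page=2"))
```

The first call returns https://shop.com/cart. The second keeps only ?page=2, because pagination changes what the page shows.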

Canonical Tags

There’s another way to handle duplicate content: canonical tags. When you have multiple versions of a page, such as /product?id=123 and /product/add-to-cart?id=123, a canonical tag tells Google which version matters most. Google can then consolidate ranking signals on that version instead of treating each variation as a separate page.

Simply add a canonical link in the page’s HTML <head> section pointing to the main version of the page. For example:

<link rel="canonical" href="https://www.example.com/product">

The canonical tag consists of:

- The rel="canonical" part that identifies this as a canonical tag

- The href attribute that points to your main page URL
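If your templates build the canonical URL themselves, the logic usually boils down to this: take the current URL, drop the query string, and print the result in the tag. Here is a minimal sketch of that idea. It assumes every parameterized version of a page should point at the parameter-free URL. That holds for cart variants but not for pages where parameters change the content, such as pagination.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_tag(url: str) -> str:
    """Build a canonical link tag pointing at the URL without its query or fragment."""
    parts = urlsplit(url)
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    # escape() keeps characters like & and " from breaking the HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'


for variant in (
    "https://www.example.com/cart?add=123",
    "https://www.example.com/cart?add=123&qty=2&coupon=SAVE10",
):
    print(canonical_tag(variant))
```

Both variants produce the same tag, which points at https://www.example.com/cart.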

However, canonical tags are a hint rather than a strict rule, so they shouldn’t be your only line of defense. Use them together with the other methods in this section. Our e-commerce SEO company can help you decide on the best approach.

Robots.txt

A robots.txt file is a plain text file placed at the root of your website (like yourstore.com/robots.txt), not in a subfolder (like yourstore.com/shop/robots.txt). Before crawling, well-behaved search engine bots fetch this file to learn which URLs they may request and which to skip.

Here’s a simple example of a robots.txt file that’s set up to protect your cart pages:

User-agent: *
Disallow: /cart/
Disallow: /checkout/

Let’s break this down:

- “User-agent: *” targets all search engines like Google, Bing, and others.

- Each “Disallow” line tells them, “don’t look at these pages.”

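- The trailing slash in /cart/ means the rule covers everything inside that folder. It does not match /cart itself, so if your cart lives at yourstore.com/cart, use Disallow: /cart instead. That broader rule also matches any other path starting with /cart, such as /cartoons.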
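You can sanity-check rules like these before publishing them with Python’s built-in urllib.robotparser module. The sketch tests the example file against a few URLs on a hypothetical yourstore.com domain, and the output shows how the trailing slash affects matching. This module only does the simple prefix matching from the original robots.txt standard. It does not understand the * and $ wildcards that Google supports.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, with no blank lines inside the group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://yourstore.com/cart/",
    "https://yourstore.com/cart/?add=123",
    "https://yourstore.com/cart",  # no trailing slash, so /cart/ does not match
    "https://yourstore.com/checkout/step-1",
    "https://yourstore.com/products/shoe",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")
```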

Even if you block particular pages in robots.txt, they can still appear in Google search results. This happens when other websites link directly to them. For instance, if someone writes a blog post that links to your cart page, Google may index that URL (usually without a description) even though it never crawled the page.

That’s why a robots.txt file alone isn’t enough. For pages that must stay out of results, use noindex meta tags. Keep in mind that Google can only see a noindex tag on a page it’s allowed to crawl. So don’t disallow a URL in robots.txt if you’re relying on noindex to keep it out of the index.
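A crawler can’t read a noindex tag on a page it’s forbidden to fetch, so it helps to check that nothing you noindex is also disallowed. The minimal sketch below does that with Python’s standard robots.txt parser. The robots.txt content and the list of noindexed URLs are hypothetical inputs; in practice you would build the list from a crawl of your site.

```python
from urllib.robotparser import RobotFileParser


def hidden_noindex_urls(robots_txt: str, noindex_urls, user_agent="Googlebot"):
    """Return noindexed URLs that robots.txt blocks, so crawlers never see the tag."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical inputs: the current robots.txt and the URLs carrying a noindex tag.
robots_txt = "User-agent: *\nDisallow: /checkout/\n"
noindexed = ["https://yourstore.com/cart", "https://yourstore.com/checkout/"]

for url in hidden_noindex_urls(robots_txt, noindexed):
    print(f"Conflict: {url} is noindexed but blocked from crawling")
```

Here only the checkout URL is flagged. Its noindex tag is invisible to Google until the Disallow rule is removed.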

Noindex Meta Tags

Unlike robots.txt, which only controls crawling, a noindex tag controls indexing directly. When Google and other major search engines crawl the page and see the tag, they keep the page out of their results.

To keep your cart pages out of the index, add this line to the HTML <head> section:

<meta name="robots" content="noindex, nofollow">

This code tells search engines two things:

- “noindex” means keep this page out of search results

- “nofollow” means don’t follow any links on this page

Alternatively, use <meta name="robots" content="noindex, follow"> if you want Google to follow the links on your cart page and pass signals to the pages they point to.

Either way, users aren’t affected: they can still click any link and shop normally.
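To confirm which directives a cart page actually serves, you can parse its HTML and read the robots meta tag. This sketch uses Python’s standard html.parser module on an inline HTML string that stands in for a real page. In practice you would feed it the HTML of your live cart page.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Cart</body></html>'
parser = RobotsMetaParser()
parser.feed(page)
print(sorted(parser.directives))
```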

Platform-Specific SEO Guidance

Every eCommerce platform calls for a slightly different approach to shopping cart SEO. Let’s look at how to apply these methods on some of the most popular eCommerce platforms.

Shopify

Shopify makes it easy to keep your cart pages hidden from search engines, but you need to know where to look and what to change.

Open your theme.liquid file and add the robots meta tag to the <head> section. Wrap it in a condition so it only affects your cart page:

{% if request.page_type == 'cart' %}
  <meta name="robots" content="noindex, nofollow">
{% endif %}

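Every Shopify store comes with a default robots.txt, which already disallows paths such as /cart and /checkout. To customize it, add a robots.txt.liquid template to your theme. Shopify’s help center article on editing robots.txt walks through the process.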

You can also use Shopify’s Liquid variables to create dynamic canonical tags:

<link rel="canonical" href="{{ canonical_url }}">

In addition, you may consider apps for Shopify such as SEO Manager and Smart SEO to customize meta tags and schema markup.

WooCommerce

To properly handle cart, checkout, and account pages in WooCommerce, install an SEO plugin first. Yoast SEO and Rank Math work great here.

If you need more specific control, and your SEO plugin doesn’t already output a robots tag for these pages, you can add a custom PHP snippet (for example, in your child theme’s functions.php). It adds noindex meta tags to cart and checkout pages dynamically:

add_action('wp_head', function () {
    // is_cart() and is_checkout() are WooCommerce conditional tags.
    if (is_cart() || is_checkout()) {
        echo '<meta name="robots" content="noindex, nofollow">' . "\n";
    }
});

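Additionally, you can add the following rules to your robots.txt file to keep crawlers away from the cart and from parameterized add-to-cart URLs. Remember that crawlers won’t see a noindex tag on any URL you block here.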

Disallow: /cart/
Disallow: /*?add-to-cart=
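The second rule uses the * wildcard. Google supports it and RFC 9309 standardizes it, but the original robots.txt standard (and Python’s urllib.robotparser) does not. The minimal sketch below translates a rule into a regular expression following RFC 9309’s description: * matches any sequence of characters, and a trailing $ anchors the end. It shows what /*?add-to-cart= does and doesn’t catch. It only tests whether a single rule matches and doesn’t implement the longest-match precedence between Allow and Disallow rules.

```python
import re


def rule_matches(rule: str, path_and_query: str) -> bool:
    """Check whether a robots.txt path rule matches a URL path plus query string."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape every literal piece, then let each * stand for "any characters".
    pattern = ".*".join(re.escape(part) for part in rule.split("*"))
    if anchored:
        pattern += "$"
    # re.match anchors at the start, just as robots.txt rules match from the path start.
    return re.match(pattern, path_and_query) is not None


for path in (
    "/shop/?add-to-cart=123",
    "/product/hoodie/?add-to-cart=45&quantity=2",
    "/product/hoodie/?color=blue&add-to-cart=45",
    "/cart/",
):
    print(path, rule_matches("/*?add-to-cart=", path))
```

The third path slips through because add-to-cart isn’t the first parameter. A broader rule such as /*add-to-cart= would catch it as well.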

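And if you want your store, including the pages that lead to the cart, to load faster, check out a caching plugin like WP Rocket. Keep cart and checkout pages themselves out of page caching, since they hold per-customer data.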

Magento

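In Magento, use the built-in robots.txt editor (Content > Design > Configuration > Search Engine Robots) and add these rules to block cart and checkout pages. Magento’s cart lives at /checkout/cart/, so the /checkout/ rule covers it as well: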

User-agent: *
Disallow: /checkout/
Disallow: /cart/

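Also, add a noindex meta tag through a layout update. In Magento 2, you can do this in a checkout_cart_index.xml layout file in your theme (app/design/frontend/<Vendor>/<theme>/Magento_Checkout/layout/):

<?xml version="1.0"?>
<page xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:noNamespaceSchemaLocation="urn:magento:framework:View/Layout/etc/page_configuration.xsd">
    <head>
        <!-- Outputs a robots meta tag on the cart page only -->
        <meta name="robots" content="NOINDEX,NOFOLLOW"/>
    </head>
</page>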

For better URL management and canonical tag configuration, you may install the Amasty SEO Toolkit extension. It’s a lifesaver if you have a large store with many products.

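Your cart pages need to be fast, too. Varnish Cache speeds up the catalog pages shoppers browse before they reach the cart. The cart itself holds private data and isn’t served from the full-page cache, so improve its server-side performance as well.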

Laravel

When working on SEO for a Laravel shopping cart, you can use Blade templates to add noindex tags dynamically to cart pages:

@if(request()->is('cart'))
    <meta name="robots" content="noindex, nofollow">
@endif

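You can also control search engine access using Laravel middleware. This version adds an X-Robots-Tag HTTP header to cart-related responses:

public function handle($request, Closure $next)
{
    // Build the response first (requires "use Closure;" at the top of the class).
    $response = $next($request);

    // Add the header only for /cart and anything under /cart/.
    if ($request->is('cart', 'cart/*')) {
        $response->headers->set('X-Robots-Tag', 'noindex, nofollow');
    }

    return $response;
}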
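To verify that the header actually goes out, you can read X-Robots-Tag from a cart page response, for example with curl -I or your browser’s developer tools. The Python sketch below covers only the parsing side. Header names are case-insensitive, and a response may carry more than one X-Robots-Tag header. The header list is a hypothetical stand-in for a real response, and the sketch deliberately ignores crawler-specific prefixes such as “googlebot:”.

```python
def x_robots_directives(headers):
    """Collect directives from every X-Robots-Tag header (names are case-insensitive)."""
    directives = set()
    for name, value in headers:
        if name.lower() == "x-robots-tag":
            directives.update(
                part.strip().lower() for part in value.split(",") if part.strip()
            )
    return directives


# Hypothetical response headers from GET /cart
cart_response_headers = [
    ("Content-Type", "text/html; charset=UTF-8"),
    ("x-robots-tag", "noindex, nofollow"),
]
directives = x_robots_directives(cart_response_headers)
print("noindex" in directives)
```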

For loading speed, look at Laravel’s cache layer (for example, backed by a Redis driver).

Finally, Laravel’s routing configuration helps maintain clean and understandable cart URLs.

Conclusion

While shopping cart SEO may appear to be a minor aspect of your store, it can have a significant impact. No one types “shopping cart page” into a search bar, right? That’s why keeping these pages out of search results is a win-win for both your rankings and your customers.

After you implement the best practices listed here, stay vigilant. A small mistake, such as a noindex tag that accidentally ends up on your product templates, can drop important pages from search results. Check your cart pages regularly with tools like Screaming Frog, Google Search Console, and Lighthouse to confirm they are properly hidden and load fast.

Need a hand with these steps? SeoProfy knows how to make sure your customers use an SEO-friendly shopping cart.