For many online stores, the biggest pain point is seeing their best product listings unindexed and hidden from shoppers, costing their business valuable traffic and sales. So how do you avoid it? If you’ve ever wondered how to get every page of your ecommerce site indexed, read on: today we’ll share actionable strategies to ensure your store’s pages are properly indexed and visible to potential customers.

Online store indexation ensures your products appear in search results when shoppers are looking for them. If search engines can’t properly crawl your site, parts of your inventory may stay out of search results. This is a common issue for ecommerce stores with complex layouts, broken links, or duplicate pages.

The good news? Solving these problems doesn’t require a massive overhaul. In our guide, we are going to show practical tips on how to structure your sitemaps, fix crawl errors, clean up duplicates, and ultimately speed up the indexing process so shoppers can easily find your products and purchase from you.

- Ecommerce site indexing is critical for making your products visible in search results.

- Common issues that influence how Google discovers your pages include crawl budget limitations, 4xx errors, and missing/outdated sitemaps.

- To prepare your site for indexing, you’ll need to optimize your site structure, create XML sitemaps, and configure robots.txt and meta tags.

- Duplicate content can waste your crawl budget and is often caused by CMS-generated tags or ecommerce filters that create multiple URLs for the same page.

The Basics of Ecommerce Site Indexing

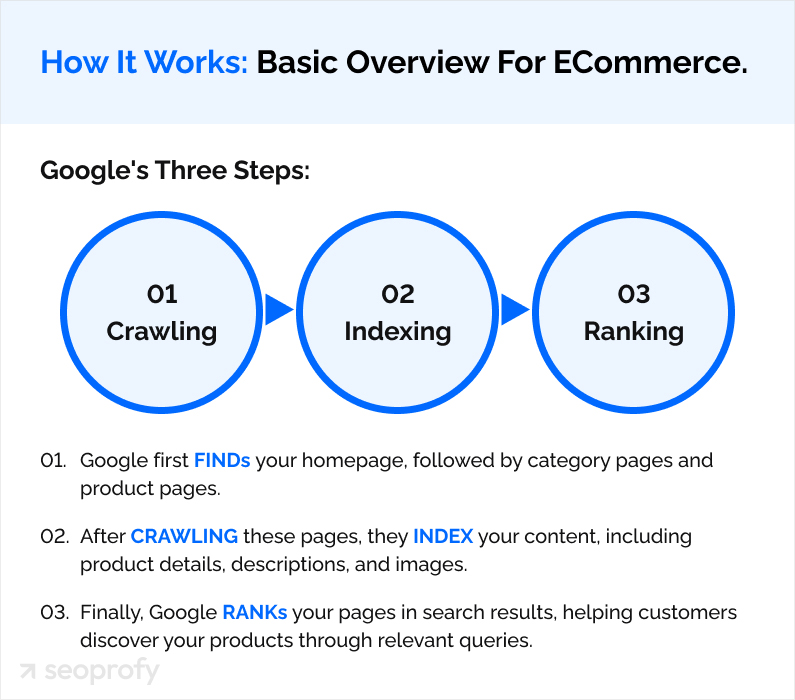

Search engines like Google use indexing to understand and organize your site’s content so they can show it to people searching for relevant products. Let’s break it down step by step:

What Is Indexing in SEO?

Indexing is how search engines discover, scan, and organize your website’s pages. It starts with crawlers such as Googlebot, which identify themselves to your server with a specific user agent. These bots visit your site, follow links, and gather details about your content, images, metadata (titles and descriptions), and internal linking. For ecommerce sites, this process is crucial for ensuring that category pages and product listings gain visibility.

Once crawlers collect this data, search engines decide whether to include each page in their index, a database where information about web pages is stored and organized. Indexed pages are later ranked based on their relevance to the search query.

Why Indexing Matters for Ecommerce Sites

Indexing is the cornerstone of visibility for ecommerce websites. Without it, your carefully crafted product pages remain invisible to search engines, effectively cutting off a primary channel for attracting customers. Proper search engine indexing ensures your ecommerce category pages and products are eligible to appear in results, enabling customers to discover your offerings.

The impact of indexing on traffic and sales cannot be overstated. Unindexed pages mean missed opportunities—shoppers can’t buy what they can’t find. Proper indexing directly correlates with increased visibility, leading to higher website traffic and, ultimately, more conversions. This is particularly critical for online stores, where every unindexed product listing represents a potential loss in revenue.

Beyond just appearing in search results, proper indexing ensures that all product pages, from high-demand items to niche offerings, are accessible to potential customers. Comprehensive indexing allows search engines to crawl and understand your entire inventory, ensuring that shoppers searching for specific products or categories can easily find what they need. For ecommerce businesses aiming for growth, indexing is not just a technical necessity—it’s a business imperative.

Common Issues in Indexing Large Ecommerce Sites

Ecommerce websites with lots of inventory often have to deal with the following issues:

Crawl Budget Limitations

Search engines allocate a certain “crawl budget” to every website, which is the number of web pages they’ll crawl during a given time. If you run a big ecommerce site, they might not get to all your pages because they only have so much time to crawl it. This means some of your high-priority site sections could be skipped.

Broken Links

A broken link is a hyperlink that leads to a page that no longer exists or can’t be accessed. These links waste your crawl budget, prevent pages from being discovered, and create a poor user experience (UX), which can hurt your rankings in the SERPs.

Missing or Outdated Sitemaps

An XML sitemap lists the pages and products on your site so Google can find them and consider them for search results. If you don’t have one, or it is out of date, search engine crawlers might overlook key sections of your site and leave them unindexed. A separate HTML sitemap can also help visitors navigate your site to find what they need and discover items they didn’t know about.

Duplicate Content

Ecommerce stores often have problems with duplicate content. Filters, sorting options, and session IDs can create multiple URLs that lead to the same product.

For example, the same item might have different URLs depending on the category it’s viewed under or the attributes selected, like size or color. This can make it harder for search engines to determine which page to rank and can also affect indexing.

Regional or language-specific versions of product pages can also trigger duplication if search engines aren’t correctly informed about the differences between them. Without proper tagging, these pages can be seen as duplicates rather than unique entries.

As you can see, there are several problems ecommerce websites have to deal with. So sometimes partnering with an experienced ecommerce SEO company can be your best bet.

They have specialists who can navigate the technicalities of ecommerce stores and understand the nuances of different CMS platforms. It might save you time and money and help you see results much faster.

By addressing these common problems and focusing your SEO efforts on proper indexing strategies, you can ensure that every product and category page is visible in search engine results. With tools like XML sitemaps, robots.txt configuration, and structured data, ecommerce stores can take full advantage of Google indexing to drive traffic and sales.

Now let’s move on to how you can prepare your site so it’s crawled, indexed, and seen by your potential customers.

Steps to Prepare an Ecommerce Site for Indexing

Here are some ecommerce and technical SEO best practices you can follow to help Google find all pages on your site:

Optimize Site Structure

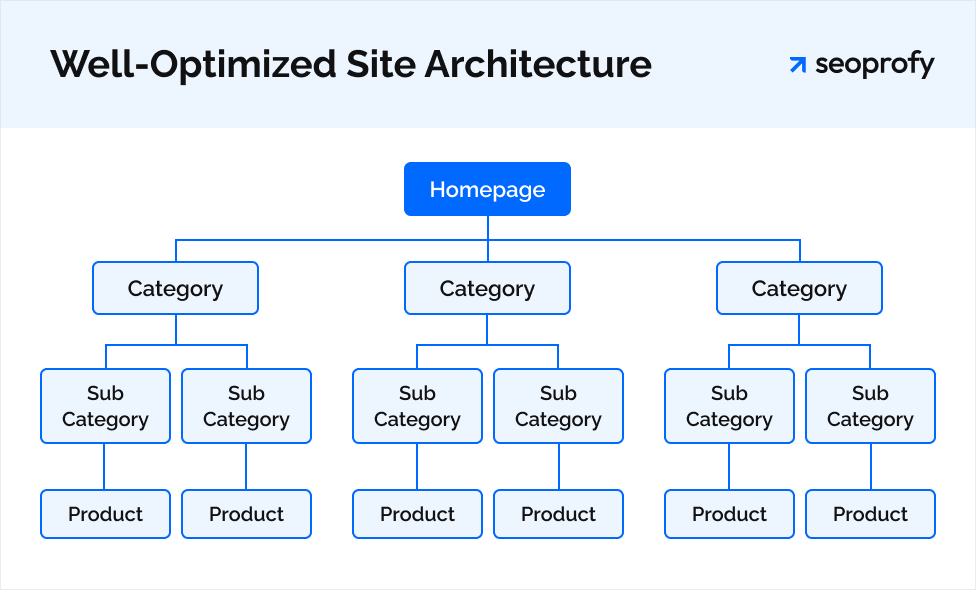

A good site architecture is the first step. In short, it’s how you organize the content and links on your website. The main goal here is to enable search engines to reach your pages easily by following links in your navigation.

Start with Categories and Subcategories

Arrange your products into logical folders. Link your menus to categories, categories to subcategories, and subcategories to product pages.

If you sell clothing, your main categories might be “Men,” “Women,” and “Kids.” Under “Men,” you might have subcategories like “T-Shirts,” “Jeans,” and “Shoes.” Use your site’s content management system (CMS) to create these groups and link products to the appropriate categories.

If your category pages don’t link directly to all products, Google may not be able to find them. Products that can only be accessed through a search box are often missed because crawlers don’t search your site the way a person does.

To fix this, make sure every product has a clickable link from your category or subcategory pages. If this isn’t possible, you can use a sitemap or Google Merchant Center feed to show Google where those products are.

Also, try to use regular clickable links (like <a href>), not JavaScript or other methods, so search engines can easily follow them and index your pages.
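To see why this matters, here is a minimal Python sketch of what a simple link-following crawler can pick up from a category page. The markup is a made-up example, and real crawlers such as Googlebot are far more capable (they can render JavaScript), so treat it only as an illustration of which links are unambiguous.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects href values from plain <a href="..."> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            # Skip empty hrefs and javascript: pseudo-links
            if href and not href.lower().startswith("javascript:"):
                self.links.append(href)


# Hypothetical category page markup
html = """
<ul>
  <li><a href="/men/t-shirts/blue-tee">Blue Tee</a></li>
  <li><span onclick="location.href='/men/t-shirts/red-tee'">Red Tee</span></li>
  <li><a href="javascript:void(0)">Green Tee</a></li>
</ul>
"""

collector = LinkCollector()
collector.feed(html)
print(collector.links)  # only the Blue Tee link is discoverable
```

Only the first product uses a regular link, so it is the only one this simple parser finds.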

Add Structured Data

Structured data is a great way to help Google read your site’s content more accurately. It also highlights important details like product prices, availability, and reviews in search results.

Shoppers are often at different stages of their shopping journey. Some might be ready to buy, while others might just compare products or read reviews. So these additional details may often appeal to them and nudge them to purchase from you.

One of the common types of structured data for ecommerce is BreadcrumbList. In short, breadcrumbs show the hierarchy of your site. To implement breadcrumbs:

- Use the BreadcrumbList schema to show the path from the homepage to the product pages.

- Add breadcrumb markup in JSON-LD format directly to your website’s code or use a plugin if you’re on a platform like Shopify or WordPress.

- Test your breadcrumb implementation using Google’s Rich Results Test tool.
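As a rough illustration of the steps above, the following Python sketch builds BreadcrumbList markup in JSON-LD. The function name `breadcrumb_jsonld` and the example.com URLs are placeholders we made up; the property names come from schema.org.

```python
import json


def breadcrumb_jsonld(trail):
    """Build a schema.org BreadcrumbList from (name, url) pairs, homepage first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical path from the homepage to a subcategory
trail = [
    ("Home", "https://example.com/"),
    ("Men", "https://example.com/men/"),
    ("T-Shirts", "https://example.com/men/t-shirts/"),
]
markup = breadcrumb_jsonld(trail)

# Embed the result in the page inside a JSON-LD script tag
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```

Paste the printed output into the Rich Results Test to confirm it is read as a breadcrumb trail.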

Other useful structured data types include:

- Product: For showing details like price, availability, and ratings.

- Review: For highlighting customer feedback.

- LocalBusiness: For stores with physical locations.
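Product markup can be generated in a similar way. The sketch below is one possible shape, assuming a single offer per product; the helper name `product_jsonld` and all sample values are invented for illustration, while the property names are taken from schema.org.

```python
import json


def product_jsonld(name, url, price, currency, in_stock, rating=None, review_count=None):
    """Build schema.org Product markup with one Offer and optional ratings."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    # Only add ratings when real review data exists
    if rating is not None and review_count:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    return data


# Hypothetical product values
product = product_jsonld(
    "Blue Tee", "https://example.com/men/t-shirts/blue-tee",
    price=19.0, currency="USD", in_stock=True, rating=4.6, review_count=38,
)
print(json.dumps(product, indent=2))
```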

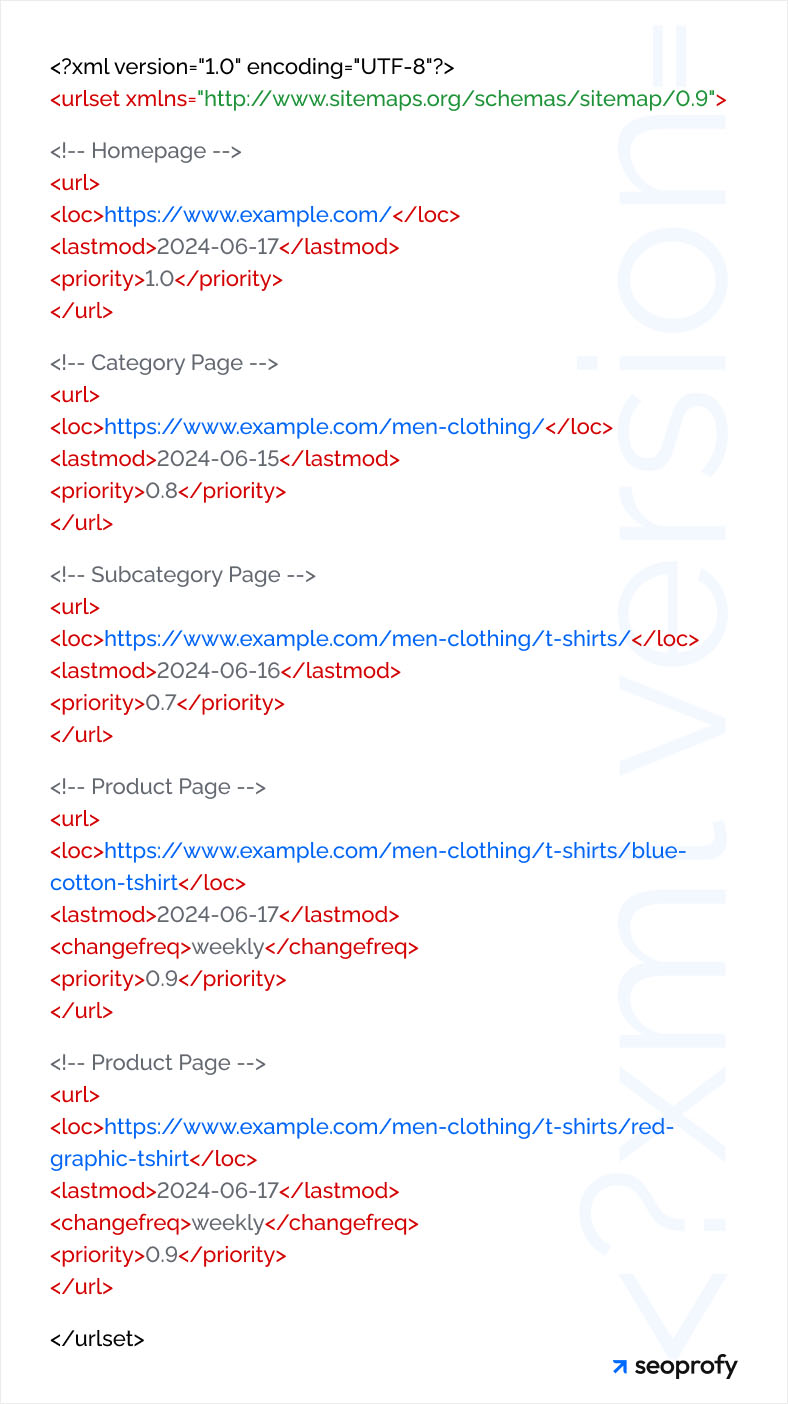

Create and Submit XML Sitemaps

As we’ve already mentioned, a sitemap is a file that provides information about the pages, videos, and other content on your site, as well as how these web addresses are connected. Google uses this file to crawl your site.

It shows which pages are most important and provides details like when they were last updated. Sitemaps are especially useful for ecommerce sites with many product pages, categories, and blogs that might not all be linked clearly through your site’s menus.

You don’t need to create an XML sitemap manually. Tools like Yoast SEO (for WordPress) or Screaming Frog can generate one for you automatically. Many content management systems (CMS) such as Shopify and Wix also include built-in sitemap features.

You’ll need to list all important pages with a 200 status code. These are your product and category pages and other content, such as blog posts. Do not include web addresses with 404 errors or duplicate content.

Once your sitemap is ready, go to Google Search Console and submit its URL in the Sitemaps section. This lets Google know where to find it so they can start crawling your site.

Try to keep your sitemaps updated and relevant. Whenever you add or remove pages on your site, you need to add these changes there, too. Many tools allow you to do this automatically.
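If your platform doesn’t generate a sitemap for you, the logic is simple enough to script. Here is a minimal Python sketch, assuming you already have a list of URLs with their status codes and last-modified dates (for example, from a crawl export). The `build_sitemap` name and the sample pages are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (url, status_code, last_modified) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status, lastmod in pages:
        if status != 200:
            continue  # leave out 404s, redirects, and other non-200 URLs
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical crawl results
pages = [
    ("https://example.com/men/t-shirts/", 200, "2025-01-10"),
    ("https://example.com/men/t-shirts/blue-tee", 200, "2025-01-12"),
    ("https://example.com/men/t-shirts/old-product", 404, "2024-06-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Running this on a schedule, or whenever products change, keeps the file in step with your catalog.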

Configure Robots.txt and Meta Tags

Optimizing your ecommerce site’s robots.txt file and meta robots tags is essential for effective SEO. These tools help guide search engine crawlers, ensuring your website’s most valuable pages are indexed and visible to potential customers.

The Role of Robots.txt in SEO

The robots.txt file instructs search engines on which parts of your website they should or shouldn’t crawl. This is especially important for ecommerce sites with numerous pages, as it helps prioritize resources and focus on high-value areas of the site.

Best Practices for Robots.txt

- Block non-essential pages, such as shopping cart, checkout, and account sections, which offer little value in search results.

- Ensure crawlers can access important assets like stylesheets, scripts, and images, which are necessary for rendering and evaluating your site properly.
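Before publishing a robots.txt change, it helps to test the draft against a few URLs. The sketch below uses Python’s built-in `urllib.robotparser` for this. The rules and URLs are examples we made up, and Python’s parser uses simple prefix matching, so results for wildcard rules may differ from Google’s own parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft: block cart, checkout, and account sections only
robots_txt = """\
User-agent: *
Disallow: /cart
Disallow: /checkout
Disallow: /account
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

urls = [
    "https://example.com/cart",
    "https://example.com/checkout/shipping",
    "https://example.com/men/t-shirts/",
    "https://example.com/static/css/site.css",
]
# Map each URL to whether a crawler may fetch it under this draft
checks = {url: parser.can_fetch("Googlebot", url) for url in urls}
for url, allowed in checks.items():
    print("ALLOW" if allowed else "BLOCK", url)
```

The cart and checkout URLs come back blocked, while the category page and stylesheet remain crawlable.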

Using Meta Robots Tags for Page-Level Control

Meta robots tags allow for granular control over individual pages, helping manage what gets indexed by search engines.

Best Practices for Meta Robots Tags

- Use the noindex directive for pages that don’t contribute to SEO, such as duplicate product pages, out-of-stock items, or internal search results.

- When a page contains useful internal links but isn’t meant for indexing, combine the noindex directive with a follow instruction, ensuring link equity flows to important pages.
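To audit which pages carry these directives, you can read the meta robots tag from each page’s HTML. This is a small Python sketch under the assumption that the directive lives in a `<meta name="robots">` tag (it can also be sent as an HTTP header, which this example does not check). The class and function names are our own.

```python
from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def is_indexable(html):
    """Return False when the page asks search engines not to index it."""
    reader = RobotsMetaReader()
    reader.feed(html)
    return "noindex" not in reader.directives and "none" not in reader.directives


# Hypothetical pages: an internal search result and a product page
search_page = '<head><meta name="robots" content="noindex, follow"></head>'
product_page = "<head><title>Blue Tee</title></head>"
print(is_indexable(search_page), is_indexable(product_page))
```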

Why These Configurations Matter

By carefully configuring robots.txt and meta robots tags, you ensure search engines focus on the pages that matter most. This not only conserves your site’s crawl budget but also improves visibility for key product and category pages, ultimately driving more organic traffic and boosting sales. Proper configuration is a critical step toward maximizing your site’s SEO performance.

Fix Crawl Errors

Crawl errors can stop search engines from accessing your website’s pages. When that happens, your products and important content might not show up. The good news? Most crawl errors are easy to spot and correct. Here’s what you need to know and how to handle the most common issues:

404 Errors (Page Not Found)

A 404 error happens when someone tries to visit a page that doesn’t exist anymore. This could happen if you’ve removed a product or changed a URL without redirecting it. You can find them using Google Search Console or Screaming Frog.

Once you’ve identified them, redirect those URLs to relevant pages on your site. For example, if a product is no longer available, redirect to a similar product or a category page. If there’s no relevant page, leave it as a 404, so Google knows the page is gone.

Soft 404 Errors

A soft 404 is a bit trickier. It happens when a page doesn’t exist but still returns a “200 OK” status, telling search engines it’s working. This confuses crawlers and wastes their time on pages that add no value.

Check for soft 404s in Google Search Console. If you moved the page, set up a “301 redirect” to the new URL. And if that page doesn’t exist anymore, return a proper “404 Not Found” or “410 Gone”.
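A rough way to spot candidates yourself is to compare the status code with what the page actually says. The following Python sketch is a simple heuristic, not how Google detects soft 404s. The phrases and the `classify_response` name are assumptions you would adapt to your own templates.

```python
# Hypothetical phrases that suggest an error page; tune these to your own templates
NOT_FOUND_PHRASES = ("page not found", "no longer available", "product does not exist")


def classify_response(status_code, body_text):
    """Label a crawled response so soft 404s stand out from real pages."""
    if status_code in (404, 410):
        return "gone"  # correct signal for a removed page
    if status_code in (301, 308):
        return "permanent_redirect"
    if 500 <= status_code < 600:
        return "server_error"
    if status_code == 200:
        text = body_text.lower()
        if any(phrase in text for phrase in NOT_FOUND_PHRASES):
            return "possible_soft_404"  # says "missing" but reports success
        return "ok"
    return "other"


print(classify_response(200, "Sorry, page not found"))
print(classify_response(404, ""))
print(classify_response(200, "Blue Tee, available in 4 sizes"))
```

Anything flagged as a possible soft 404 still needs a manual look before you redirect it or change its status code.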

Server Errors (5xx)

Server errors happen when your website can’t handle a request properly. These errors can block search engines and users from accessing your pages. An occasional error isn’t critical, but if the errors persist, crawlers may visit your site less often, affecting your ecommerce SEO performance.

You can check Google Search Console for any server error reports. Additionally, talk to your hosting provider or developer to figure out what’s causing the problem—it could be a plugin, too much traffic, or outdated code.

To make your server stronger, try to add caching, use a CDN, or upgrade your hosting plan to handle more visitors. Keep an eye on server logs to catch and fix issues early before they influence your site.
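If you have access to raw server logs, a short script can show which URLs return 5xx errors most often. This Python sketch assumes the common Apache/Nginx “combined” log format, and the sample lines are invented. Adjust the pattern if your server logs differently.

```python
import re
from collections import Counter

# Matches the request and status parts of a combined-format log line
LOG_PATTERN = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def server_errors_by_path(log_lines):
    """Count 5xx responses per requested path."""
    errors = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and match.group("status").startswith("5"):
            errors[match.group("path")] += 1
    return errors


# Made-up sample log lines
sample_log = [
    '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /men/t-shirts/ HTTP/1.1" 200 5120 "-" "Googlebot/2.1"',
    '203.0.113.5 - - [10/Jan/2025:10:00:02 +0000] "GET /products/blue-tee HTTP/1.1" 503 0 "-" "Googlebot/2.1"',
    '203.0.113.9 - - [10/Jan/2025:10:00:05 +0000] "GET /products/blue-tee HTTP/1.1" 503 0 "-" "Mozilla/5.0"',
]
errors = server_errors_by_path(sample_log)
print(errors.most_common(5))
```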

Techniques to Improve Crawlability

Now that you know how important crawling and indexing are, let’s look more at what methods you can use to improve them:

Manage Crawl Budget

We’ve mentioned earlier that the budget Google has to crawl your site is limited. So, if you have hundreds or thousands of pages, you need to guide crawlers to spend their time on those that matter most, like your products and categories, instead of unnecessary ones.

Check Google Search Console to see how search engines are crawling your site. If they’re spending time on pages that aren’t important, such as outdated URLs, you can block or clean up those areas to make crawling more efficient.
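One way to make this check concrete is to group crawled URLs by site section and look at each section’s share. The Python sketch below assumes you have a list of URLs that crawlers requested (from server logs or an export). The function name and sample URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def crawl_share_by_section(crawled_urls):
    """Return each top-level section's share of crawler requests."""
    sections = Counter()
    for url in crawled_urls:
        path = urlsplit(url).path
        first_segment = path.strip("/").split("/")[0] or "(home)"
        sections[first_segment] += 1
    total = sum(sections.values())
    if total == 0:
        return {}
    return {section: count / total for section, count in sections.most_common()}


# Hypothetical crawler requests
crawled = [
    "https://example.com/search?q=tee",
    "https://example.com/search?q=jeans",
    "https://example.com/search?q=shoes",
    "https://example.com/products/blue-tee",
]
share = crawl_share_by_section(crawled)
print(share)
```

In this made-up sample, internal search pages take three quarters of the requests, which would be a sign to block or clean up that area.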

Crawlers tend to revisit pages that are updated often. Refreshing product descriptions, adding new arrivals, or posting fresh blog content can signal that your site is active. This encourages search engines to prioritize these pages during their crawl.

You’ll also want to consolidate duplicate content pages like those created by filters or sorting options that can waste your crawl budget. This leads us to our second technique.

Handle Duplicate Content

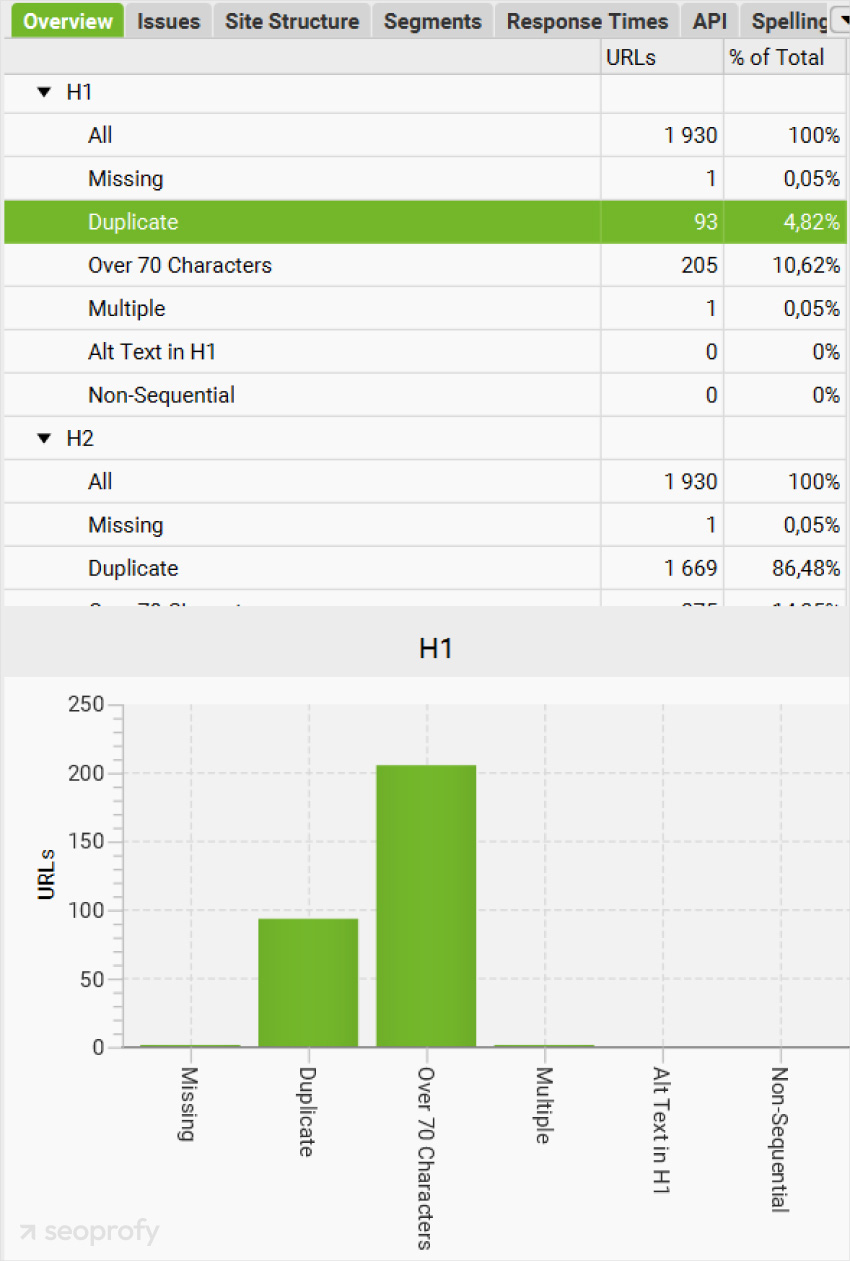

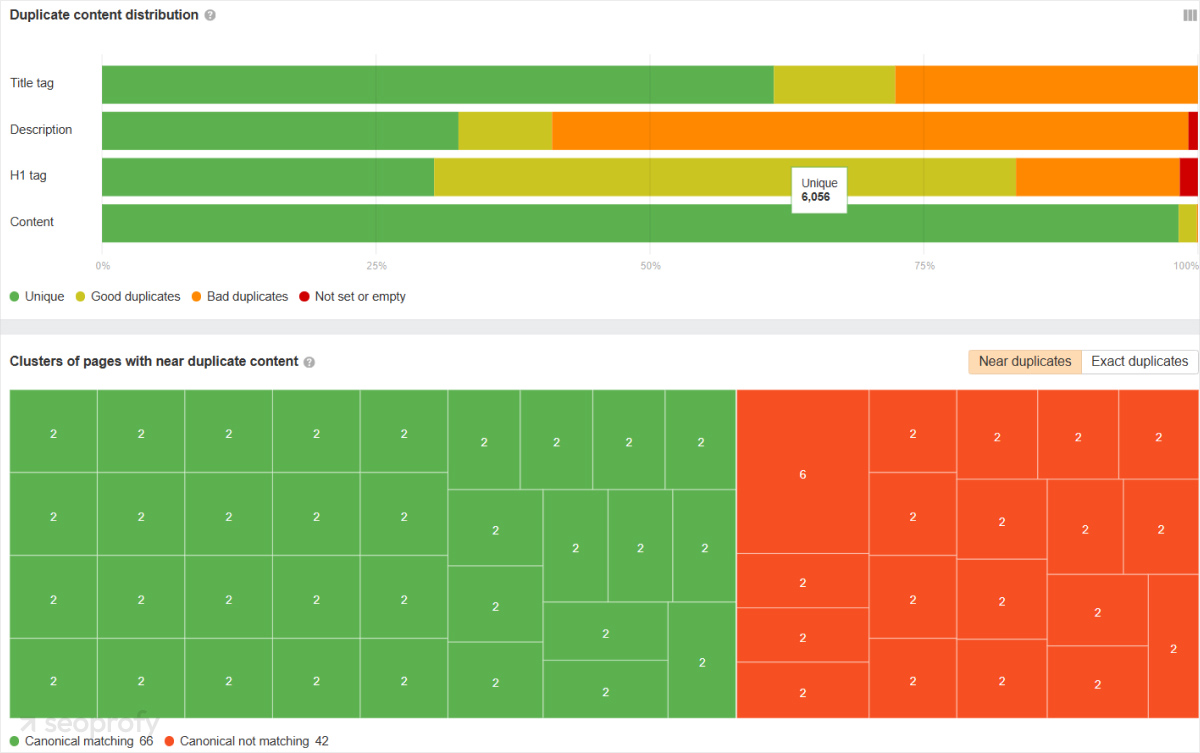

Duplicate content shows up when the same or very similar text is found in multiple places, either on your own site or other websites. This can make Google unsure about which version to rank, splitting link value and lowering your rankings in search engine results pages.

To fix this, you can use tools like Screaming Frog or Ahrefs Site Audit. Let’s start with Screaming Frog. You’ll need to download their SEO Spider to begin.

Once it’s installed, open the tool, enter your website’s URL, and click Start to begin the crawl. Note that the free version is limited to 500 URLs. If your site is larger, you’ll need to purchase a license to analyze it in full. After the crawl is completed, you can:

- Navigate to the Content/Headers section

- Use the Filter dropdown menu at the top

- Select Exact Duplicates to see pages with identical content

- Export the data and map out a plan on how to fix these issues
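For a sense of how exact-duplicate detection can work, here is a minimal Python sketch that groups pages by a hash of their text. It is our own illustration, not a description of how any particular tool implements the check, and the sample pages are made up.

```python
import hashlib
from collections import defaultdict


def find_exact_duplicates(pages):
    """pages: dict mapping URL -> main body text. Returns groups of duplicate URLs."""
    groups = defaultdict(list)
    for url, text in pages.items():
        # Collapse whitespace and case so trivial differences don't hide duplicates
        normalized = " ".join(text.split()).lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the same product reachable under two categories
pages = {
    "/men/t-shirts/blue-tee": "Soft cotton tee in blue.",
    "/sale/blue-tee": "Soft  cotton tee in BLUE.",
    "/men/jeans/slim-fit": "Slim fit jeans in dark wash.",
}
duplicates = find_exact_duplicates(pages)
print(duplicates)
```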

You can also do it in Ahrefs. Navigate to Site Audit and start a crawl of your website. Next, go to the Content Duplicates report.

In the report, Ahrefs highlights duplicate or near-duplicate pages. Clusters without proper canonical tags are marked in orange, so they are easy to spot.

Click on a duplicate cluster to see which pages have the issue. This might happen because of duplicate meta descriptions, titles, or body text. There are a few ways to fix it:

- Add canonical tags: These tags tell Google which page to treat as the main one, especially if the page shows up under different URLs due to filters or tracking parameters.

- Use 301 redirects: For duplicate pages that no longer need to exist, use a 301 redirect to send both search engines and users to the preferred version.

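- Manage URL parameters: Dynamic URLs with parameters (like filters or sorting) can generate unnecessary duplicates. Google retired the URL Parameters tool in Search Console in 2022, so handle these with canonical tags, robots.txt rules, and internal links that consistently point to the clean URL.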

- Write distinctive descriptions: Try not to rely on manufacturer-provided text and create original product descriptions. AI tools can help you speed up this process.

- Hreflang for regional pages: If you have pages that target different regions or languages, use hreflang tags to show Google they are unique variations, not duplicates.
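To illustrate the canonical tag approach from the list above, this Python sketch strips parameters that don’t change the product shown and builds the tag for the clean URL. The `IGNORED_PARAMS` set is an assumption; which parameters are safe to ignore depends on your store.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that change sorting or tracking, not the page content
IGNORED_PARAMS = {"sort", "order", "sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url):
    """Drop ignorable query parameters and the fragment, and lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the <link rel="canonical"> tag to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


messy = "https://Example.com/men/t-shirts/blue-tee?color=blue&sort=price&utm_source=news"
print(canonical_url(messy))
print(canonical_tag(messy))
```

Here the color filter is kept because it changes what the shopper sees, while sorting and tracking parameters are dropped.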

Use Internal Links Strategically

Internal links connect pages on your site, usually through clickable text or phrases. They help spread link equity, attract more organic traffic to other pages, and show crawlers how your site is organized.

You can also use them to suggest related products or best-sellers and increase the chances of additional sales. Here are some useful tips you can follow for internal linking:

Use Descriptive and Relevant Anchor Text

The words you use for links, known as anchor text, matter. Descriptive anchor text tells both search engines and users what the linked page is about. Instead of generic phrases like “click here,” use text that matches the page’s content, say “Best-Selling Summer Dresses.”

Link to Important Pages from High-Authority Pages

Your homepage or main categories can pass value to the web addresses they link to. Use these pages to link directly to your best-selling products, seasonal promotions, or most valuable categories. For example, from your homepage, link to your “New Arrivals” or “Top Deals” section.

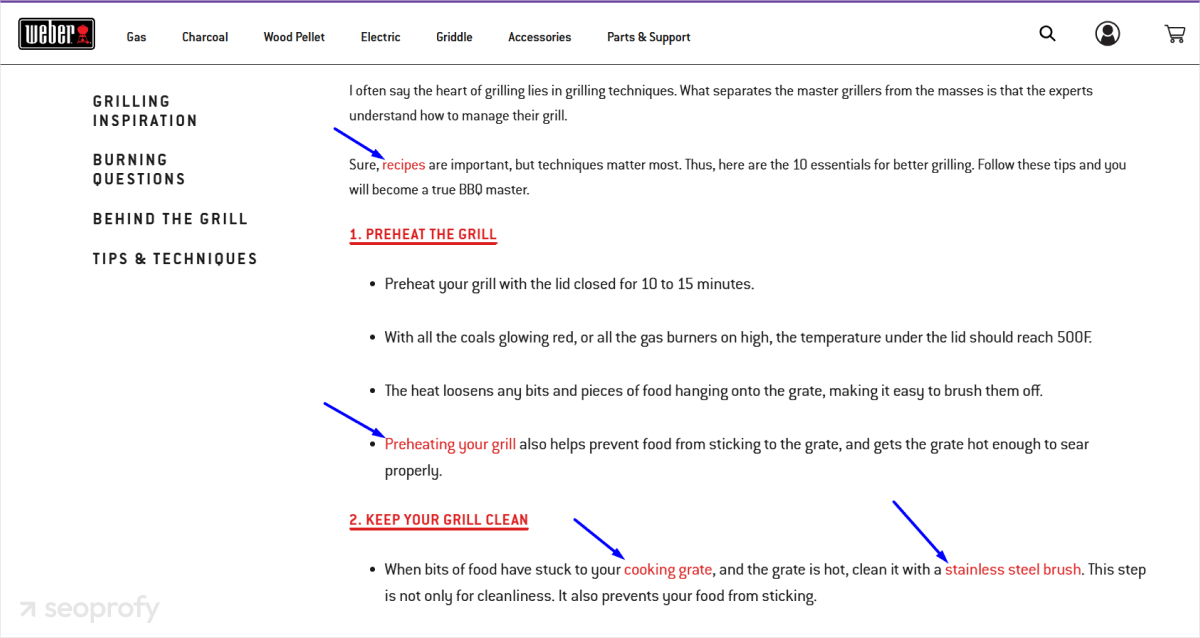

Create Topic Clusters

Group related content and interlink pages that belong to the same category or topic. For example, if you sell grills, a blog post about grilling tips could link to pages for cleaning tools, barbecue utensils, and recipes. Here’s how Weber, a well-known company that sells grilling equipment and accessories, does it:

You can use your CMS to add “related products” sections or manual links in product descriptions. Some platforms, like Shopify or WooCommerce, have built-in features for this.

Audit Your Internal Links

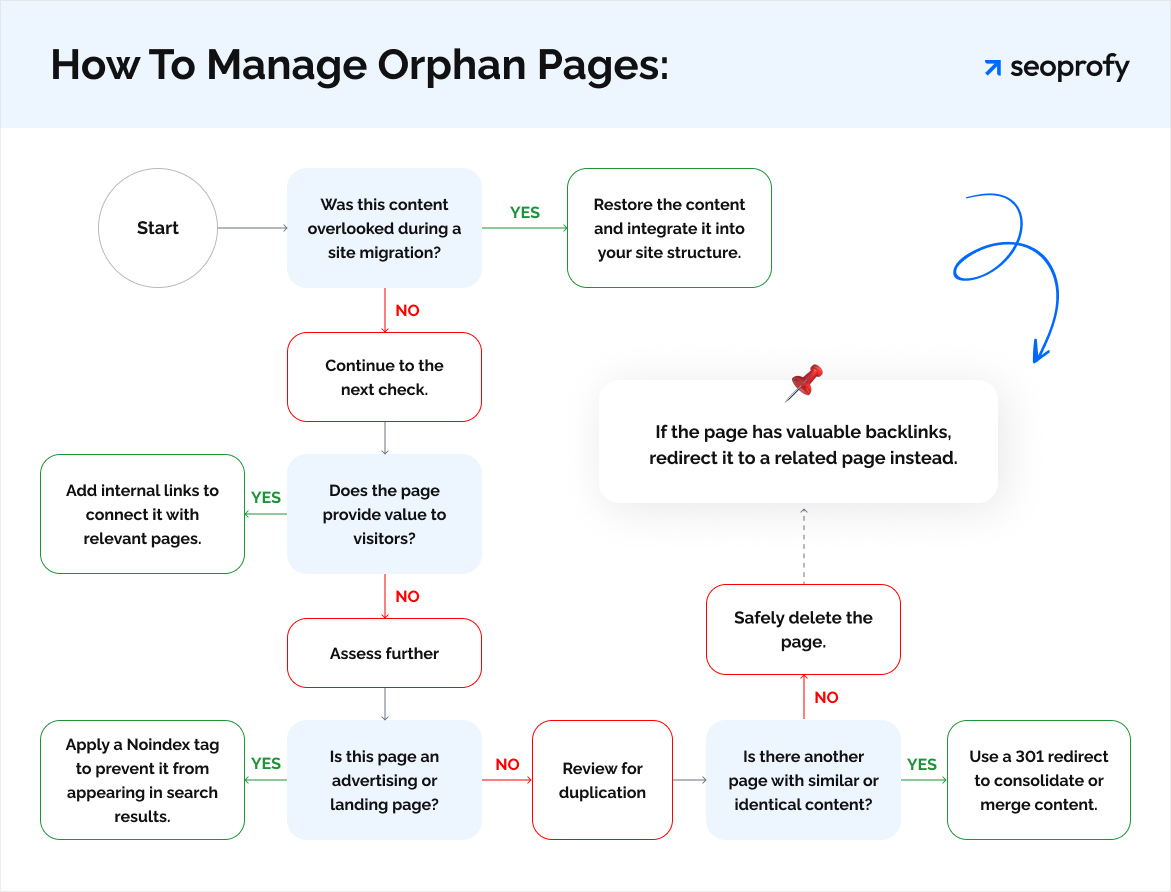

It’s good practice to audit your internal links regularly and update them if necessary. Once again, you can use Screaming Frog or Ahrefs’ Site Audit tool for this purpose. While you’re at it, you can also check for orphan pages.

These are web pages that have no links pointing to them. It happens if old pages are left published without links, out-of-stock products are still live, or your CMS generates extra URLs automatically. This generally isn’t a big problem, but too many orphan pages can waste your crawl budget and confuse users.

If you plan to use Screaming Frog for your audit, you’ll first need to connect your Google Analytics and Google Search Console accounts. If you find any orphan pages, you can choose one of the following options:

- Delete them

- Add noindex tag

- Merge or consolidate

- Add internal links
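The idea behind orphan page detection is a simple set comparison: take every URL you know exists and subtract the ones that receive at least one internal link. Here is a minimal Python sketch, assuming you have both lists from a sitemap and a crawl. The function name and sample data are hypothetical.

```python
def find_orphans(known_urls, internal_links):
    """
    known_urls: URLs from your sitemap or analytics.
    internal_links: dict mapping each crawled page to the set of URLs it links to.
    """
    linked = set()
    for source, targets in internal_links.items():
        # A page linking to itself doesn't make it reachable from elsewhere
        linked.update(target for target in targets if target != source)
    return sorted(set(known_urls) - linked)


# Hypothetical site: the old jacket page is in the sitemap but nothing links to it
known = ["/", "/men/", "/men/blue-tee", "/men/old-jacket"]
links = {
    "/": {"/men/"},
    "/men/": {"/", "/men/blue-tee"},
    "/men/blue-tee": {"/men/", "/men/blue-tee"},
}
orphans = find_orphans(known, links)
print(orphans)
```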

Advanced Indexing Tactics

All that we’ve covered so far is excellent for most websites, but what about those that are a little more complex? If your ecommerce site has dynamic content, filters, or is built on JavaScript, you’ll need a few extra tricks up your sleeve.

Pre-Render or Use Static Site Generation

Ecommerce websites that rely heavily on JavaScript can be trickier for search engines to crawl, and sometimes crawlers can’t access parts of your content. Pre-rendering or static site generation can help fix this.

Static site generation creates HTML versions of your pages during the build process instead of generating them dynamically when a user visits. This means your web addresses are pre-built and ready for search engines to crawl right away. These static HTML pages can also be cached by a CDN (content delivery network).

Tools such as Rendertron or headless CMS platforms can help with this, although they require some coding knowledge.

Handle Pagination and Filters the Right Way

Pagination and filters are something every ecommerce store has, but they can lead to duplicate pages or thin content that harms SEO. For example, search engines might treat “Sort by Price” or “Sort by Popularity” as separate, unique pages, which wastes your crawl budget.

If a category spans multiple pages, make it clear that they belong to the same section: give each page its own URL and link the pages to each other with regular links. Google retired the URL Parameters tool in Search Console in 2022, so handle URLs with extra details such as color and size with canonical tags, robots.txt rules, and consistent internal linking instead.

Get Your Pages Indexed Faster

It can feel like forever for new products or updates to get indexed. Google’s Indexing API is officially limited to job posting and livestream pages, so it isn’t an option for product pages, but you can still speed things up.

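Submit new or updated URLs through the URL Inspection tool in Google Search Console. Requests are made one URL at a time and Google limits how many you can send per day, so this works for a handful of pages (around 10-15), not an entire catalog. It’s not much, but if you have a seasonal promotion, it can definitely help. You can also share your content on social media or add it to your sitemap to attract search engine crawlers faster.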

Conclusion

Indexing is one of those behind-the-scenes aspects of ecommerce SEO that often gets overlooked. But it is crucial for online stores. The more pages get indexed, the better your chances of being seen by potential customers—and that’s what leads to more sales.

When your site structure is clear, your sitemaps are up to date, and any crawl errors are cleaned up, it’s much easier for search engines to find and display your products.

And with the strategies we outlined today, you now have a roadmap to get your entire ecommerce catalog indexed and ranking in search. To take it even further, you can check out our extensive SEO guide for ecommerce to optimize your store for better visibility.

If you need some expert help along the way, schedule a free consultation with our agency. Our SEO team has expertise in technical SEO and knows how to optimize even the most complex online stores to drive more traffic and purchases.