Technical SEO for ecommerce sites is the silent engine behind high-performing online stores. Without proper structure, search engines won’t notice even the most attractive storefront. Ecommerce platforms present unique technical challenges — endless product pages, complex filters, and third-party scripts that slow performance. If your site isn’t technically optimized, Google may crawl the wrong pages, ignore important ones, or struggle to load your content altogether.

This guide is built for ecommerce business owners, SEO specialists, and developers who want to build scalable websites that rank and convert. We’ll walk through essential technical SEO components and platform-specific fixes, and show how technical SEO audits for ecommerce uncover hidden problems that hurt your rankings.

- Technical SEO for ecommerce significantly impacts visibility: 30% of survey respondents said it’s the biggest factor in better search rankings.

- Without proper crawling and indexing, your products are invisible to search engines.

- Duplicate content issues are very common in the ecommerce industry and can negatively affect your positions in Google results.

- XML sitemaps guide search engines to all your essential product and category pages.

- Clear, readable URLs help both shoppers and search engines understand what they will find on each page.

- Site speed can make or break sales, as the vast majority of shoppers abandon slow-loading websites.

Key Technical SEO Components

Every site relies on a solid technical foundation to stay visible in search results. In fact, 30% of survey respondents said that technical SEO improvements had the biggest impact on their search rankings.

While content and backlinks often get the spotlight, it’s the backend elements (how your site is structured, loaded, and rendered) that directly impact how well Google can crawl and index your pages. Ecommerce websites, in particular, have to deal with dynamic URLs, paginated content, and faceted navigation.

Understanding and implementing these ecommerce SEO components ensures your store is not just searchable but competitive.

The first and most critical step? Ensuring your site can be crawled and indexed correctly.

Crawlability and Indexability

Crawlability is how easily search engines like Google can access the pages on your site, while indexability determines whether those pages can be stored in Google’s index. If either fails, your products won’t rank, no matter how great your content strategy for ecommerce is.

For ecommerce websites, crawlability is often blocked by:

- Poorly configured robots.txt files

- Incomplete or missing sitemaps

- Overuse of session IDs or URL parameters

Indexability issues frequently arise from:

- Duplicate content without canonical tags

- Noindex meta tags applied mistakenly

- Soft 404s and broken internal links

You can use Google Search Console and crawl tools like Screaming Frog to detect and fix these issues. Make sure your important product and category pages are accessible, properly linked, and included in an XML sitemap.

Sitemaps and Robots.txt Configuration

An XML sitemap is another critical aspect of technical SEO for ecommerce and acts like a roadmap for search engines, pointing them toward the most important pages on your ecommerce site. For stores with thousands of products and categories, this isn’t optional — it’s essential. A well-structured sitemap ensures that new, seasonal, and high-priority pages get discovered and indexed faster, especially when internal linking alone isn’t enough.

Your sitemap should:

- Include all indexable products, categories, and blog pages.

- Exclude filtered, duplicate, and out-of-stock product URLs.

- Be dynamically updated as your site grows or changes.

- Be submitted to Google Search Console and Bing Webmaster Tools.

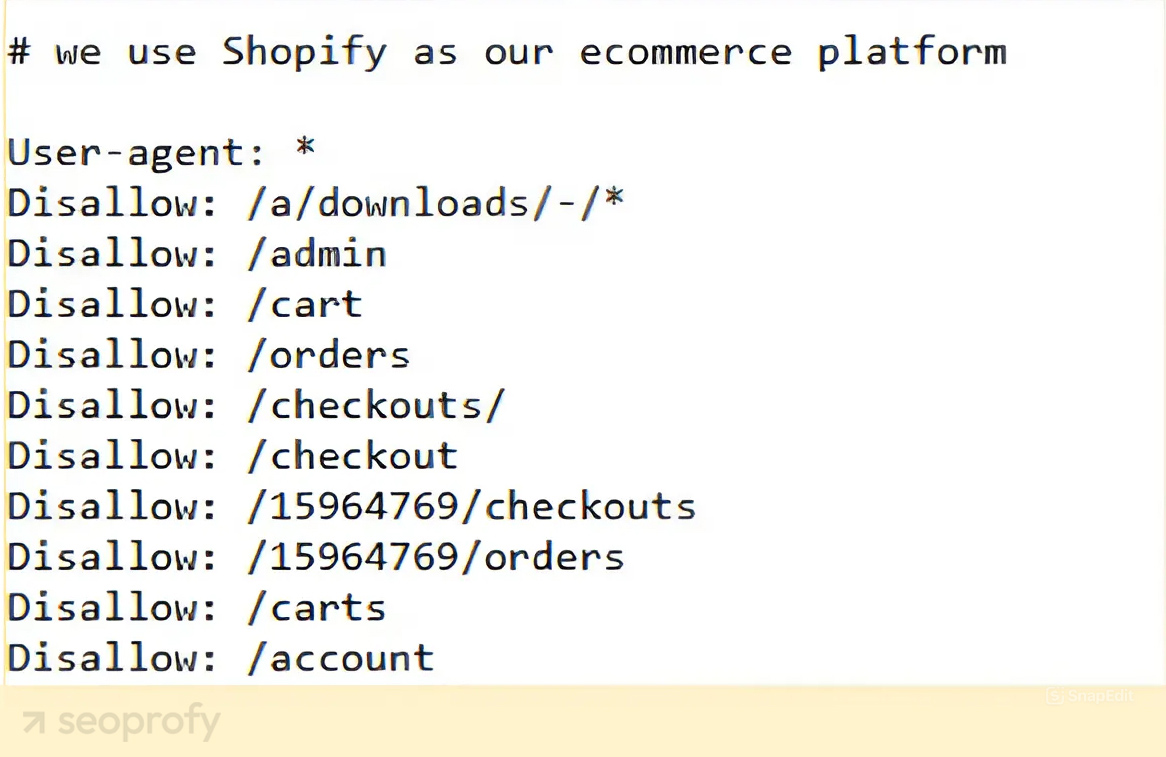
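
As a rough illustration of the checklist above, the following Python sketch builds a sitemap from a list of page records using only the standard library. The record fields (`url`, `indexable`, `in_stock`, `lastmod`) and the sample URLs are assumptions made for this example; a real store would pull them from its own catalog.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def belongs_in_sitemap(page: dict) -> bool:
    """Keep indexable, in-stock pages whose URL carries no filter parameters."""
    has_parameters = bool(urlsplit(page["url"]).query)
    return page["indexable"] and page["in_stock"] and not has_parameters


def build_sitemap(pages: list[dict]) -> str:
    """Return sitemap XML containing only the pages that pass the filter."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for page in pages:
        if not belongs_in_sitemap(page):
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page["url"]
        ET.SubElement(entry, "lastmod").text = page["lastmod"]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical catalog records, for illustration only.
PAGES = [
    {"url": "https://mysite.com/men/shoes/sneakers/nike-airmax",
     "indexable": True, "in_stock": True, "lastmod": "2024-05-01"},
    {"url": "https://mysite.com/men/shoes",
     "indexable": True, "in_stock": True, "lastmod": "2024-05-03"},
    {"url": "https://mysite.com/men/shoes?color=blue",
     "indexable": True, "in_stock": True, "lastmod": "2024-05-03"},
    {"url": "https://mysite.com/men/shoes/sneakers/old-model",
     "indexable": True, "in_stock": False, "lastmod": "2023-11-20"},
]

sitemap_xml = build_sitemap(PAGES)
```

Regenerating the file from catalog data on a schedule is one way to keep it "dynamically updated"; most platforms also offer built-in or plugin-based sitemap generation.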

The robots.txt file, on the other hand, is your gatekeeper. It tells crawlers where they are not allowed to go. This is critical for managing crawl budget — especially for ecommerce sites with faceted navigation and endless parameter-based URLs.

A properly configured robots.txt should:

- Block crawl access to non-essential paths like /cart/, /login/, and internal search results (/search?query=…).

- Allow access to key areas like product and category pages.

- Avoid disallowing important scripts (like CSS or JS) that help Google render the page correctly.

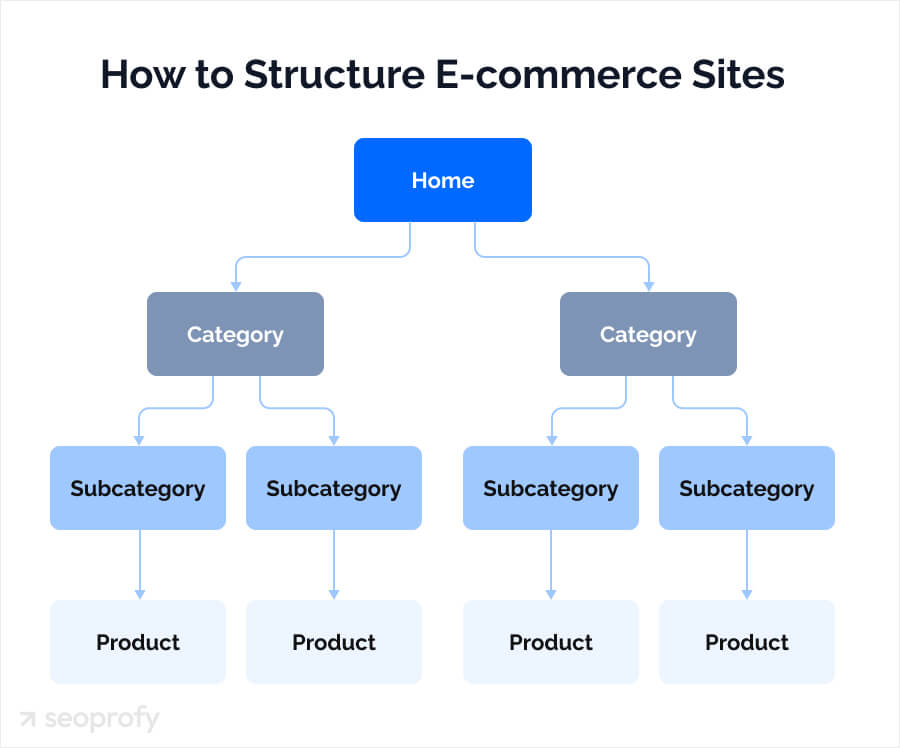
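
One way to sanity-check rules like these before deploying them is Python’s built-in `urllib.robotparser`. The sketch below is only an approximation: the robots.txt content and URLs are made up for this example, and the standard-library parser uses simple prefix matching, so it will not reproduce Google’s wildcard handling.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example store.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /login/
Disallow: /search
"""


def crawl_report(robots_txt: str, urls: list[str], agent: str = "*") -> dict[str, bool]:
    """Map each URL to True if the given user agent may crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


report = crawl_report(
    ROBOTS_TXT,
    [
        "https://mysite.com/men/shoes/sneakers/nike-airmax",
        "https://mysite.com/cart/",
        "https://mysite.com/search?query=sneakers",
    ],
)
```

Running a check like this against a list of your most important URLs can catch an accidental block before it reaches production.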

Site Architecture and Internal Linking

Your site architecture should be easy for Google to interpret, because it affects how quickly pages are indexed and how link equity is distributed between them. A well-structured architecture improves Google’s crawl efficiency by making content easier to access and reducing unnecessary paths.

Key architecture tips:

- Use logical category > subcategory > product hierarchies.

- Avoid orphan pages; every product should link from somewhere.

- Include an HTML sitemap for large inventories.

For example, an optimal architecture might look like this:

Home → Category → Subcategory → Product Page

This hierarchy should also be reflected in your URLs. For example:

/men/shoes/sneakers/nike-airmax

Internal links pass authority and guide visitors. Link products to related items, use keyword-rich anchor text, and connect blog posts to categories or product pages.

Run regular audits with tools like Screaming Frog to fix broken or underlinked pages. Strong architecture and linking boost crawlability, rankings, and conversions.
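
To make the idea of orphan pages and click depth concrete, here is a minimal sketch that walks an internal link graph breadth-first from the home page. The `LINKS` dictionary and page paths are hypothetical; in practice the graph would come from a crawler export.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: number of clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def find_orphans(links: dict[str, list[str]], all_pages: set[str], start: str = "/") -> list[str]:
    """Pages that exist but cannot be reached by following internal links."""
    reachable = click_depths(links, start)
    return sorted(page for page in all_pages if page not in reachable)


# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/men", "/blog"],
    "/men": ["/men/shoes"],
    "/men/shoes": ["/men/shoes/sneakers"],
    "/men/shoes/sneakers": ["/men/shoes/sneakers/nike-airmax"],
}
ALL_PAGES = {
    "/", "/men", "/blog", "/men/shoes", "/men/shoes/sneakers",
    "/men/shoes/sneakers/nike-airmax",
    "/men/shoes/sneakers/old-model",  # exists in the catalog but nothing links to it
}

depths = click_depths(LINKS)
orphans = find_orphans(LINKS, ALL_PAGES)
```

Pages with a large depth value are candidates for extra internal links, and anything in `orphans` needs at least one.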

Crawling and Indexing Best Practices

To make sure that all important pages of your online store or site are indexed, follow these technical recommendations.

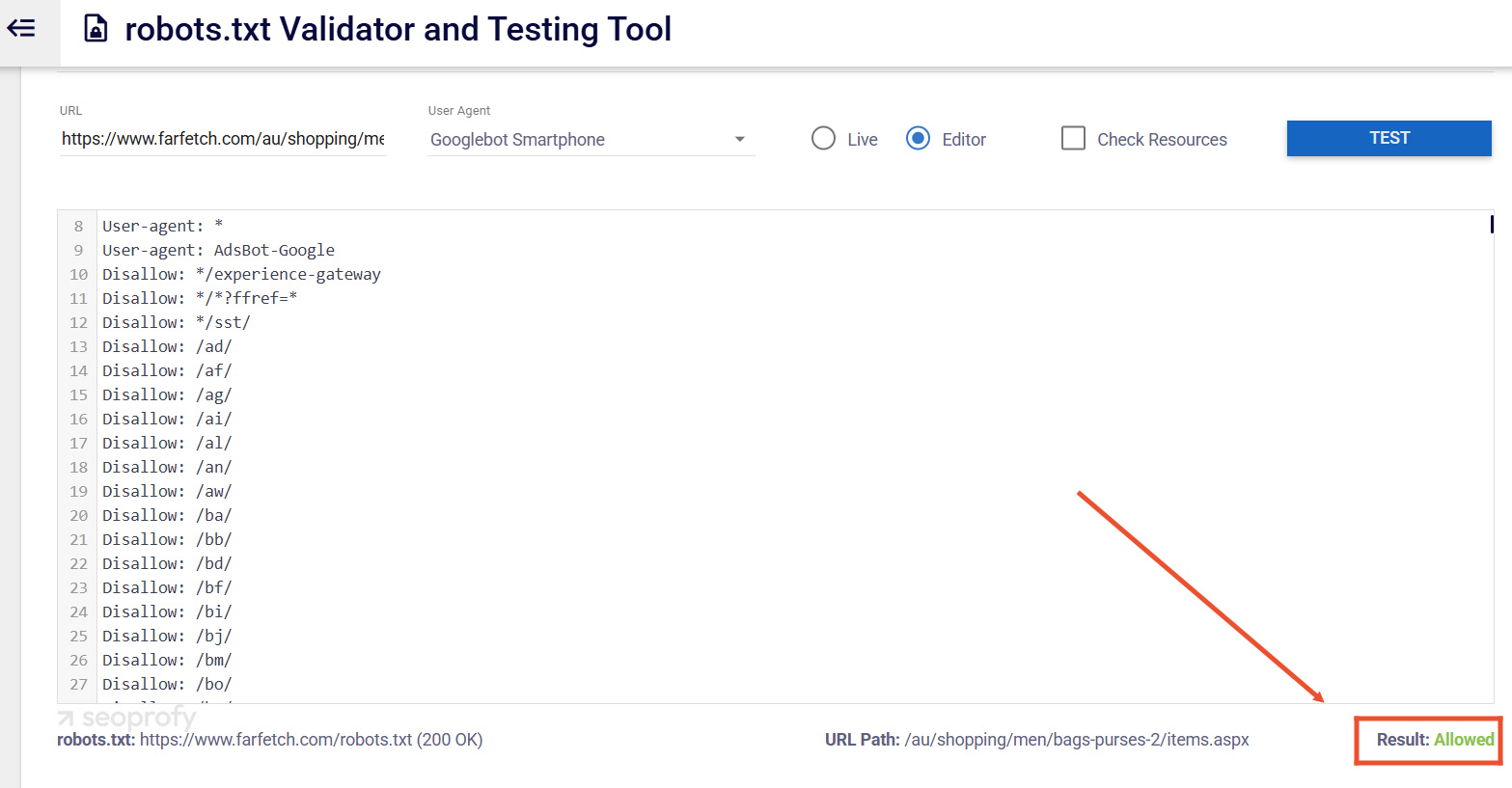

1. Make sure important pages are not blocked from crawling

Robots.txt is a text file located at the root of your website at https://mysite.com/robots.txt. As we mentioned, this file tells search engines which sections they are allowed to crawl and which are restricted.

Sometimes, though, a default configuration contains lines like “Disallow: /catalog/” or “Disallow: /blog/”, which stop search engines from crawling the product catalog and blog, respectively.

To check if a page has these restrictions and fix them:

- Enter https://mysite.com/robots.txt in your browser, replacing mysite.com with the actual address of your website

- Look through the file for lines like Disallow: /catalog/, Disallow: /blog/, and others that contain the Disallow command and lead to critical sections of your site

- If such lines are found, either remove them yourself or consult the developers who worked on your website

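On top of that, you can open the robots.txt report in Google Search Console, which replaced the older robots.txt Tester, to see which robots.txt files Google found, when it last fetched them, and whether it hit any errors.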
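
The manual check in the steps above can also be scripted. This is a simplified sketch: it ignores user-agent groups and wildcard patterns, and the list of critical sections is an assumption you would replace with your own paths.

```python
# Sections that must stay crawlable (assumption for this example).
CRITICAL_SECTIONS = ("/catalog/", "/blog/")


def blocking_rules(robots_txt: str, critical: tuple[str, ...] = CRITICAL_SECTIONS) -> list[tuple[int, str]]:
    """Return (line number, path) for each Disallow rule that covers a critical section."""
    flagged = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() != "disallow":
            continue
        path = value.strip()
        # A rule blocks a section when the section path starts with the rule path.
        if path and any(section.startswith(path) for section in critical):
            flagged.append((number, path))
    return flagged


# Hypothetical file with two problematic rules.
SAMPLE = """\
User-agent: *
Disallow: /cart/
Disallow: /catalog/
Disallow: /blog/   # left over from staging
"""

flagged = blocking_rules(SAMPLE)
```

Here `flagged` points at the two lines that would need to be removed or discussed with your developers.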

2. Use correct robots meta tags

Robots meta tags control how search engines interact with each page. Unlike robots.txt, which applies to the whole site, these tags work on individual pages.

Check your product pages for tags like <meta name="robots" content="noindex">. If one is present, it’s a problem because search engines simply won’t include that page in search results.

In general, most product and category pages, as well as blog posts, should either have no robots meta tag (search engines index the page and follow its links by default) or use <meta name="robots" content="index, follow">, which states the same behavior explicitly.

And be careful with pagination pages, filtered product views, and shopping cart pages, as they often create duplicate content. We suggest using noindex, follow, so they don’t appear in search results, but Google can still find and follow links from them.
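
A quick way to audit this across many pages is to read the robots meta tag from each page’s HTML. The sketch below uses Python’s standard `html.parser`; the sample HTML snippets are invented for illustration, and fetching the pages is left out.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        self.directives.extend(
            part.strip().lower() for part in content.split(",") if part.strip()
        )


def robots_directives(html: str) -> list[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def is_indexable(html: str) -> bool:
    """No robots meta tag means indexable; "noindex" or "none" means excluded."""
    directives = robots_directives(html)
    return "noindex" not in directives and "none" not in directives


# Hypothetical page fragments.
PRODUCT_PAGE = "<html><head><title>Blue Swift Trainers</title></head></html>"
FILTERED_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
```

A product page that returns `False` from `is_indexable` is worth investigating, while a filtered view returning `False` is usually intentional.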

3. Add pages to sitemap.xml

When you manage your sitemap, make sure it includes everything that matters for your store:

- All product pages

- Category pages

- Blog posts, as they drive traffic

- Pages like About, Contact, and FAQs

If you add or remove products, your sitemap should be updated accordingly to reflect these changes. And whenever you make bigger updates to your site, go to Google Search Console and submit your new sitemap. This helps Google discover your latest content more quickly.

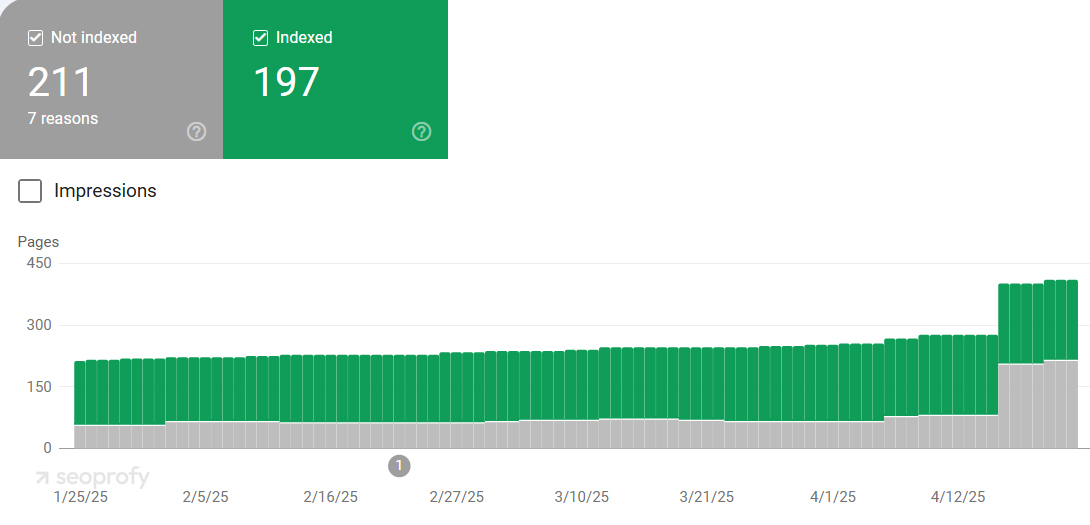
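
To spot a sitemap that has fallen out of sync with the catalog, you could compare the URLs it lists with the URLs that are currently live. This is a minimal sketch; the sitemap content and the `LIVE_URLS` list are placeholders for data you would export from your own store.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def sitemap_drift(sitemap_xml: str, live_urls: list[str]) -> dict[str, list[str]]:
    """Report live pages missing from the sitemap and listed pages that no longer exist."""
    listed = sitemap_urls(sitemap_xml)
    live = set(live_urls)
    return {"missing": sorted(live - listed), "stale": sorted(listed - live)}


# Hypothetical sitemap and catalog export.
SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://mysite.com/men/shoes</loc></url>
  <url><loc>https://mysite.com/men/shoes/sneakers/old-model</loc></url>
</urlset>
"""
LIVE_URLS = [
    "https://mysite.com/men/shoes",
    "https://mysite.com/men/shoes/sneakers/nike-airmax",
]

drift = sitemap_drift(SITEMAP, LIVE_URLS)
```

Anything under `missing` should be added, and anything under `stale` should be dropped before you resubmit the sitemap.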

4. Check indexing manually

We also recommend periodically checking that your pages are actually being indexed. The simplest way is to search Google for “site:mysite.com” (using your own domain), which returns a rough list of the pages Google has indexed.

If you prefer a more reliable method, use the “Page indexing” report in Google Search Console (formerly called “Coverage”). It shows which pages are indexed and why the others are not.

If you don’t see some of your key pages in the results, double-check their robots meta tags, see if they’re included in your sitemap, and make sure they’re linked from other parts of your site.

URL Structure and Navigation Optimization

Good URLs and navigation bring a double benefit: they make your store more visible on Google and simplify shopping for your customers. A perfect URL is one that is clean and descriptive.

Compare these two URLs:

mystore.com/womens-shoes/running-sneakers/blue-swift-trainers

mystore.com/p?id=57294

It’s the first variant that explains to both shoppers and search engines exactly what’s on the page.

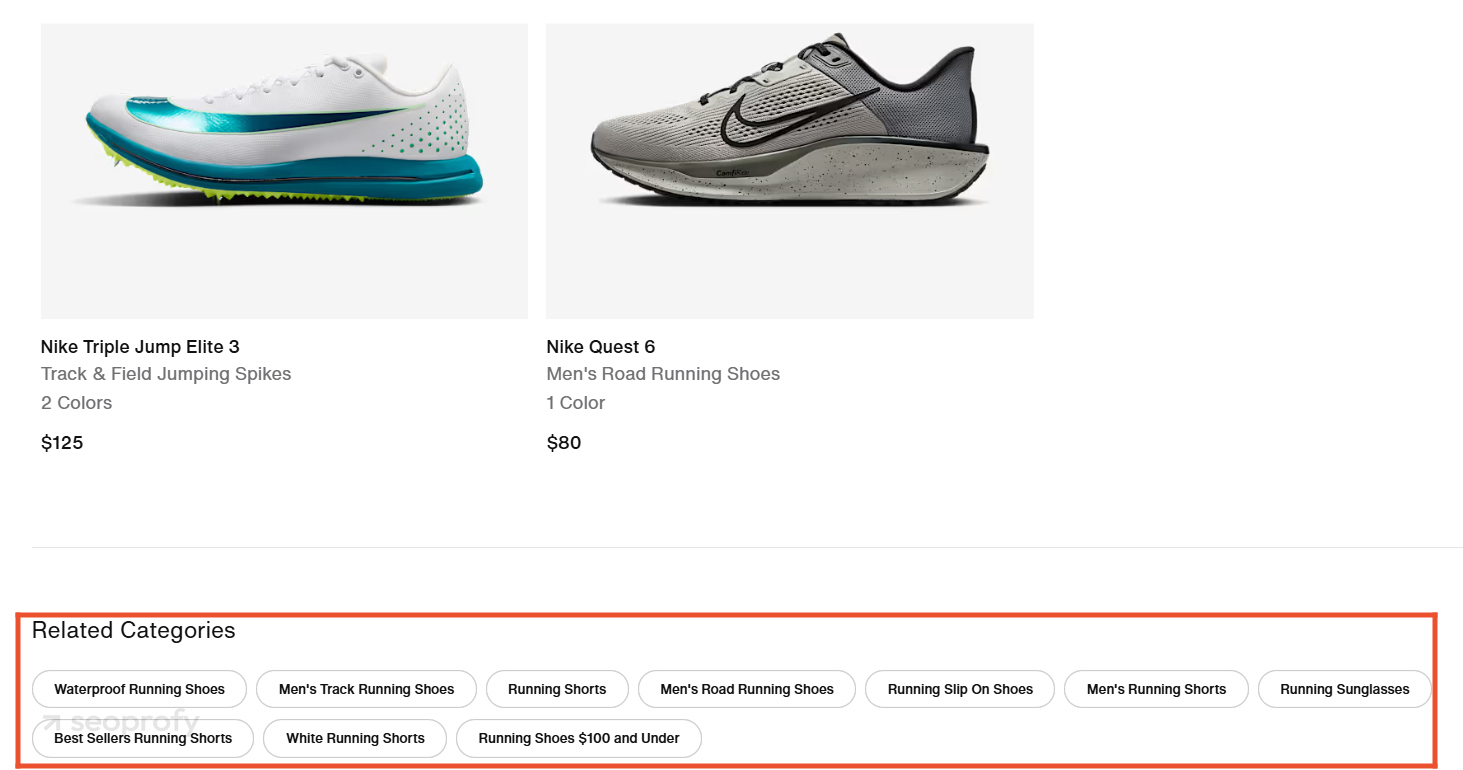
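
If your platform lets you control how URLs are generated, a small helper can turn category and product names into clean paths like the first example. The function names here are our own, and the rules (lowercase, hyphens, ASCII only) are one reasonable convention rather than a requirement.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase, strip accents and apostrophes, and join words with hyphens."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    ascii_text = ascii_text.replace("'", "")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def product_path(*segments: str) -> str:
    """Build a category/subcategory/product path from human-readable names."""
    return "/" + "/".join(slugify(segment) for segment in segments)


path = product_path("Women's Shoes", "Running Sneakers", "Blue Swift Trainers")
```

With these inputs, `path` matches the descriptive URL shown above.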

The same applies to your entire site structure. Your categories should be logical and branch into subcategories.

Yet, good navigation can still have hidden problems. Filters and sorting options often create multiple URLs that show identical products, which confuses search engines about which version to rank. Later in this article, we’ll discuss how to solve this issue.

Internal linking is another part of technical SEO for ecommerce, and it means connecting your web pages to each other. For instance, you could link a running shoes page to related categories. This lets shoppers discover more items and gives search crawlers more paths across your website.

Page Speed and Performance Enhancements

Customers rarely notice a fast page, but they certainly notice a slow one. If your pages take too long to appear, you risk losing a significant number of sales. A Shopify survey of 750 consumers and 395 marketers revealed that when a website loads slower than expected, 45% of users are less likely to make a purchase, and 37% are less likely to revisit the site.

Google also takes page speed into account, and that includes the mobile version of your site. After all, about 70% of people shopping online now browse ecommerce sites using their phones.

So, when your pages load fast, it’s a double win: happy customers and better visibility. Here’s how you can achieve these two goals.

Image Optimization Techniques

Great photos sell products, but they can also slow down your site. You should find a balance between quality and file size: crisp, detailed images that load in milliseconds. Take a look at a couple of image optimization best practices:

- Resize images to the exact size needed: If your product image area is 800×600 pixels, don’t upload a 3000×2000 picture. The browser still has to download the larger file and then resize it, so it’s a waste of time and resources.

- Compress your images: Tools like TinyPNG or ImageOptim can reduce file size without noticeable quality loss.

- Convert images to modern formats: WebP images are typically smaller than JPEGs at comparable quality.

- Enable lazy loading: With this technique, your images will load only when customers are about to see them.

- Consider a CDN: Content delivery networks place copies of your images on servers around the world, so they load faster for visitors nearby.
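
The resizing and lazy-loading points can be sketched in a few lines. The helper below only computes target dimensions and builds the `<img>` markup; it does not touch image files, and the file path and alt text are placeholders. `loading="lazy"` is the browser-native lazy-loading attribute.

```python
from html import escape


def fit_within(width: int, height: int, max_width: int, max_height: int) -> tuple[int, int]:
    """Scale dimensions down to fit the display area, keeping the aspect ratio."""
    scale = min(max_width / width, max_height / height, 1.0)  # never upscale
    return round(width * scale), round(height * scale)


def img_tag(src: str, alt: str, width: int, height: int) -> str:
    """Build an <img> tag with explicit dimensions and native lazy loading."""
    return (
        f'<img src="{escape(src, quote=True)}" alt="{escape(alt, quote=True)}" '
        f'width="{width}" height="{height}" loading="lazy">'
    )


# A 3000x2000 original shown in an 800x600 product image area.
target_width, target_height = fit_within(3000, 2000, 800, 600)
tag = img_tag("/media/products/blue-swift-trainers.webp", "Blue Swift Trainers",
              target_width, target_height)
```

Setting explicit `width` and `height` also lets the browser reserve space before the image arrives, which reduces layout shifts.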

You can always opt for ecommerce SEO services and hire specialists who’ll advise you on the most optimal way to improve your site speed.

Browser Caching

Without caching, shoppers would have to download the same files every time they return to your site later. And that’s a lot of unnecessary waiting.

But with browser caching, when someone visits your store for the first time, their browser will download all your images, JavaScript files, etc. The next time the customer opens the same pages, their browser will already have these files saved, and, thus, your pages will appear almost instantly.

So what should be cached, and for how long?

- Static images and logos: These rarely change, so they can be cached for a longer period, like a month or even a year.

- Product images: These might change seasonally, so take a middle-ground approach and cache them for a few weeks.

- Dynamic content like pricing: This should have minimal or no caching to ensure customers always see current information.
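
In practice, these lifetimes are expressed through the `Cache-Control` response header. The mapping below is a sketch under assumed URL prefixes (`/static/`, `/media/products/`, `/api/prices/`); your store’s paths and the exact durations will differ, and the header is normally set in your server or CDN configuration rather than in application code.

```python
DAY = 24 * 60 * 60  # seconds

# (path prefix, Cache-Control value) pairs; prefixes are hypothetical.
CACHE_RULES = [
    ("/static/", f"public, max-age={365 * DAY}"),         # logos, icons: about a year
    ("/media/products/", f"public, max-age={21 * DAY}"),  # product images: a few weeks
    ("/api/prices/", "no-store"),                         # pricing: never cached
]
DEFAULT_POLICY = "no-cache"  # everything else is revalidated on each request


def cache_control_for(path: str) -> str:
    """Pick the Cache-Control value for a request path."""
    for prefix, policy in CACHE_RULES:
        if path.startswith(prefix):
            return policy
    return DEFAULT_POLICY
```

For example, `cache_control_for("/static/logo.svg")` yields a one-year lifetime, while a pricing endpoint is never stored by the browser.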

Reducing Server Response Time

Before your customer sees anything in your store, their browser requests information from your server and then waits. This waiting period is your server response time, and it affects every single page on your site.

The challenge is that it happens before any content starts loading. You can optimize every image, but if your server takes a long time to respond, customers will still experience an initial delay.

Several factors influence your server response time:

- Hosting quality: Budget hosting is often about sharing server resources with hundreds of other websites. If one of them has a traffic spike, everyone suffers. Hosting with dedicated resources can make a dramatic difference.

- Database queries: Every time someone loads a category page or searches for a product, your site likely runs several database queries. Complex or inefficient queries can also make your customers wait.

- Traffic levels: If you run a Black Friday promotion, you might need temporary upgraded resources to handle the load.

Duplicate Content and Canonicalization Strategies

As we said, ecommerce site pages often create duplicate content, and this can be another problem when it comes to technical SEO for ecommerce. Let’s examine several methods that let you avoid duplicate content creation.

Canonical Tags

Canonical tags allow you to define which version of a page should be considered the “official” one when the same product appears in multiple places. The tag itself is a single line of code in your page’s header.

When do you need these tags? Your ecommerce site creates duplicate or similar content in several scenarios:

- When products appear in multiple categories

- On filtered product pages (by size, color, price, etc.)

- In search result pages

- On paginated category pages

- Through URL parameters (like tracking codes or session IDs)

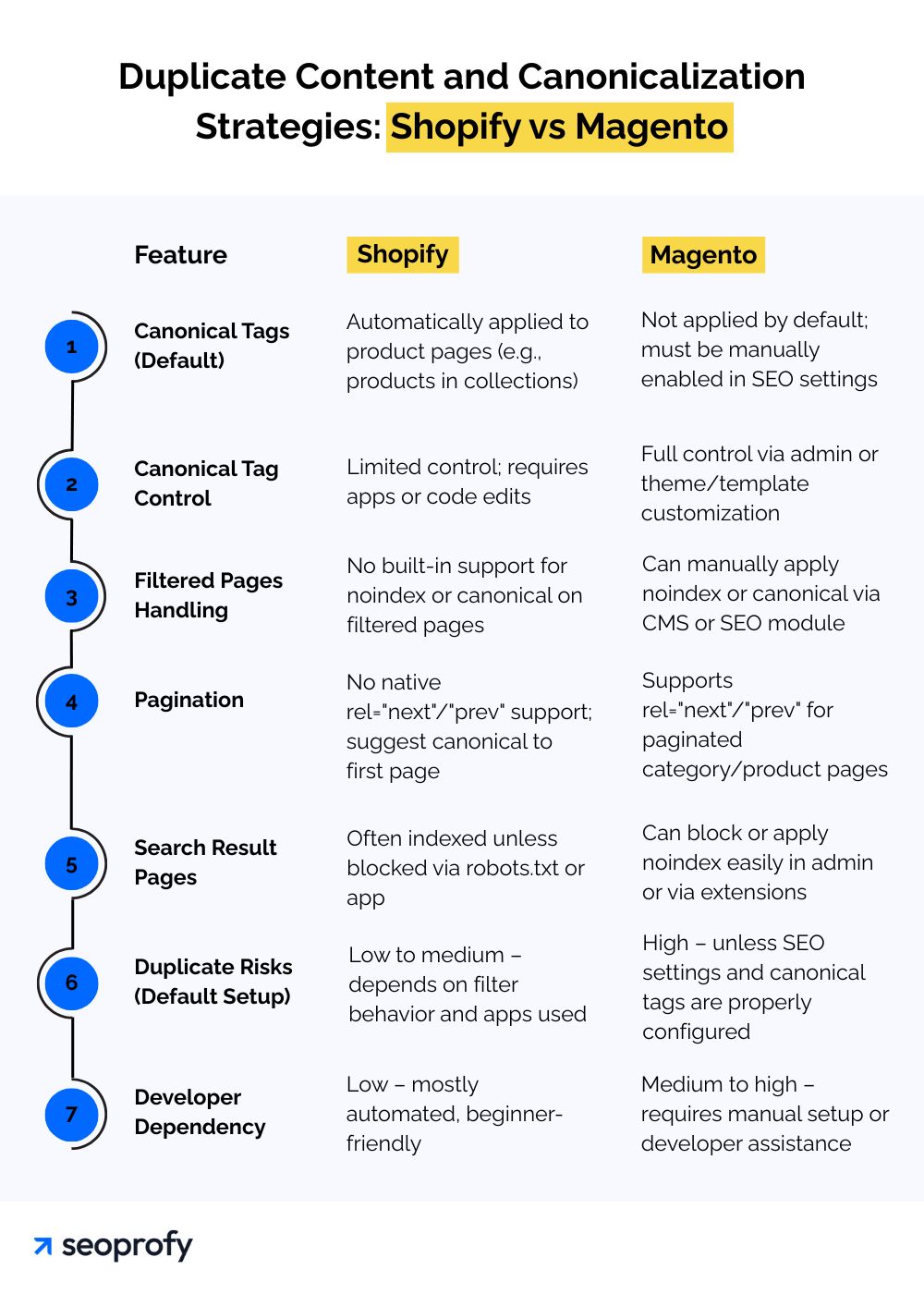

Different content management systems (CMS) handle this issue in their own ways, so you should also understand how your platform works. Magento and Shopify, two of the best ecommerce platforms for SEO, have different approaches.

With Magento, you often need to manually configure canonical tags and URL structures. This gives you more control but requires more technical knowledge.

Shopify, on the other hand, applies canonical tags to product pages in collections, but you may still need to manage custom canonicals for filtered or parameterized URLs.
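
Whatever the platform, it helps to verify what the rendered pages actually declare. The following sketch extracts the canonical URL from a page’s HTML with Python’s standard library; the two sample pages stand in for the same product reached through different category paths, and their URLs are invented for this example.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_of(html: str):
    """Return the canonical URL, or None if the page has zero or several."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals[0] if len(finder.canonicals) == 1 else None


# The same hypothetical product served under two category paths.
VIA_RUNNING = '<head><link rel="canonical" href="https://mystore.com/products/blue-swift-trainers"></head>'
VIA_SALE = '<head><link rel="canonical" href="https://mystore.com/products/blue-swift-trainers"></head>'
NO_CANONICAL = "<head><title>Blue Swift Trainers</title></head>"
```

If every variant of a product returns the same value from `canonical_of`, the duplicates are consolidated; a `None` result flags a page with a missing or conflicting tag.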

Parameter Handling in URLs

Extra bits of text after the question mark in your store’s URLs might seem harmless, but session IDs can be particularly problematic for your technical SEO for ecommerce, as they create unique URLs for every visitor.

Some ecommerce owners wonder why they can’t just block parameters with robots.txt. While this prevents crawling, it doesn’t solve the underlying issue — search engines might still index these URLs if they’re linked from elsewhere. So, the following parameter handling instructions are more effective:

- Identify parameter types: Know which ones change content (color=blue) or just reorganize it (sort=price)

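- Monitor Google Search Console: Google retired the URL Parameters tool in 2022, so use the Page indexing report to see which parameterized URLs end up indexed, and control them with the canonical and noindex techniques in this list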

- Add noindex tags: These are for filtered pages that shouldn’t appear in search results but are useful for shoppers

- Implement AJAX filters: They update page content without changing the URL, eliminating parameter-based duplicates

- Maintain clean canonical tags: Point all variations to your preferred “clean” URL

- Be consistent with internal links: Use parameter-free URLs when linking between pages on your site

- Consider “rel=nofollow”: Add this to internal links that must include parameters
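
Several of these steps depend on knowing the “clean” version of a URL. Here is a minimal sketch that strips parameters which do not change page content; the set of ignored parameter names (`sessionid`, `sort`, and anything starting with `utm_`) is an assumption you would adapt to your own store.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters that never change what the page shows (assumption for this example).
IGNORED_PARAMS = {"sessionid", "sort"}
IGNORED_PREFIXES = ("utm_",)


def clean_url(url: str) -> str:
    """Drop tracking, session, and sorting parameters; keep content-changing ones."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name.lower() not in IGNORED_PARAMS
        and not name.lower().startswith(IGNORED_PREFIXES)
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


cleaned = clean_url(
    "https://mystore.com/womens-shoes?utm_source=news&color=blue&sessionid=abc&sort=price"
)
```

The result can serve as the canonical target and as the URL used in internal links. Whether a content-changing parameter such as `color` should stay in the canonical URL is a separate decision that depends on whether you want that filtered page indexed.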

JavaScript SEO for Ecommerce Platforms

JavaScript technical SEO is worth mentioning separately because modern online stores use JavaScript to create smooth shopping experiences with the help of instant filters, quick-view popups, and interactive galleries.

The catch is that when Google first visits your site, it sees the basic HTML before processing any effects enabled by JavaScript. If your product information only appears after JavaScript, search engines might miss it.

To ensure search engines notice all the important information, try the following:

- Keep product names, descriptions, and prices in the basic HTML.

- Use the URL Inspection Tool in Google Search Console to see how Google renders your page.

- Use proper headings and page structure.

- Try disabling JavaScript and check whether you can still see product information.
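
The “disable JavaScript” test can be approximated in code by checking whether key product details appear in the raw HTML, outside of any script. This sketch does not execute JavaScript at all, which is the point; the two sample pages and the product details are invented for illustration.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text from the raw HTML, skipping <script> and <style> content."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(html: str, required: list[str]) -> list[str]:
    """Return the required strings that are not present before JavaScript runs."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())
    return [item for item in required if item not in text]


REQUIRED = ["Blue Swift Trainers", "$89.00"]  # hypothetical product name and price

# Product data injected only by JavaScript.
JS_ONLY = ('<div id="app"></div><script>renderProduct('
           '{"name": "Blue Swift Trainers", "price": "$89.00"})</script>')
# Product data present in the server-rendered HTML.
SERVER_RENDERED = '<h1>Blue Swift Trainers</h1><span class="price">$89.00</span>'
```

A non-empty result for a product page suggests that the content depends on JavaScript rendering and deserves a closer look with the URL Inspection Tool.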

With good JavaScript SEO, you can have both a modern shopping experience and high search visibility.

Conclusion

There are many areas to cover and techniques to apply to make sure your ecommerce site works properly and that Google can crawl and index it. Much also depends on what your site is built on: a platform like Shopify or Magento, or a custom-made website. Each case has its own challenges and best practices.

And while developers often handle many technical SEO tasks, such as site speed optimization or structured data implementation, they may not always take into account the bigger SEO picture.

SeoProfy is a professional SEO agency that has served ecommerce sites for years with transparent SEO pricing. We work alongside developers to ensure technical details and search visibility go hand in hand, helping online stores attract more customers and increase sales. Contact us today, and our team will conduct a comprehensive technical SEO audit of your website, providing recommendations on how to address underlying issues and improve your search rankings.